Nature Knows and Psionic Success

God provides

Promising Findings – Scientists Successfully Revive Lost Brain Function Following Stroke

Researchers have discovered a potential stroke recovery therapy that restores brain function in mice after a stroke. The therapy, which uses small-molecule inhibitors of the metabotropic glutamate receptor mGluR5, has been shown to improve sensorimotor functions even when administered days after a stroke. The study suggests that, combined with rehabilitation training, this treatment could offer a new approach to stroke recovery. However, further research, including human trials, is necessary.

“Communication between nerve cells in large parts of the brain changes after a stroke and we show that it can be partially restored with the treatment,” says Tadeusz Wieloch, senior professor of neurobiology at Lund University in Sweden.

“Concomitantly, the rodents regain lost somatosensory functions, a loss that around 60 percent of all stroke patients experience today. The most remarkable result is that the treatment began several days after a stroke,” Wieloch continues.

Understanding Stroke and Current Treatment Limitations

In an ischemic stroke, lack of blood flow to the brain causes damage, which rapidly leads to nerve cell loss that affects large parts of the vast network of nerve cells in the brain. This may lead to loss of function such as paralysis, sensorimotor impairment, and vision and speech difficulties, but also to pain and depression.

There are currently no approved drugs that improve or restore function after a stroke, apart from clot-dissolving treatment in the acute phase (within 4.5 hours of the stroke). Some spontaneous improvement occurs, but many stroke patients suffer chronic loss of function. For example, about 60 percent of stroke sufferers lose somatosensory functions such as touch and position sense.

Promising Results from a Recent Study

An international study published recently in the journal Brain, led by a research team from Lund University in collaboration with the University of Rome La Sapienza and Washington University in St. Louis, shows promising results in mice and rats treated with a class of substances that inhibit metabotropic glutamate receptor 5 (mGluR5), a receptor that regulates communication in the brain’s nerve cell network.

“Rodents treated with the mGluR5 inhibitor regained their somatosensory functions,” says Tadeusz Wieloch, who led the study published in Brain.

Treatment Timing and Effects

Two days after the stroke, when the damage had developed and functional impairment was most prominent, the researchers started treating the rodents that showed the greatest impairment.

“A temporary treatment effect was seen after just 30 minutes, but treatment for several weeks is needed to achieve a permanent recovery effect. Some function improvement was observed even when the treatment started 10 days after a stroke,” says Tadeusz Wieloch.

Importantly, sensorimotor functions improved, even though the extent of the brain damage was not diminished. This, explains Tadeusz Wieloch, is due to the intricate network of nerve cells in the brain, known as the connectome, i.e. how various areas of the brain are connected and communicate with each other to form the basis for various brain functions.

“Impaired function after a stroke is due to cell loss, but also because of reduced activity in large parts of the connectome in the undamaged brain. The receptor mGluR5 is apparently an important factor in the reduced activity in the connectome, which is prevented by the inhibitor which therefore restores the lost brain function,” says Tadeusz Wieloch.

Enhanced Recovery with Combined Approaches

The results also showed that sensorimotor function was further improved if treatment with the mGluR5 inhibitor was combined with somatosensory training by housing several rodents in cages enriched with toys, chains, grids, and plastic tubes.

The researchers hope that in the future their results could lead to a clinical treatment that could be initiated a few days after an ischemic stroke.

“Combined with rehabilitation training, it could eventually be a new promising treatment. However, more studies are needed. The study was conducted on mice and rats, and of course, needs to be repeated in humans. This should be possible since several mGluR5 inhibitors have been studied in humans for the treatment of neurological diseases other than stroke, and shown to be tolerated by humans,” says Tadeusz Wieloch.

Reference: “Inhibiting metabotropic glutamate receptor 5 after stroke restores brain function and connectivity” by Jakob Hakon, Miriana J Quattromani, Carin Sjölund, Daniela Talhada, Byungchan Kim, Slavianka Moyanova, Federica Mastroiacovo, Luisa Di Menna, Roger Olsson, Elisabet Englund, Ferdinando Nicoletti, Karsten Ruscher, Adam Q Bauer and Tadeusz Wieloch, 01 September 2023, Brain.

DOI: 10.1093/brain/awad293

The research was conducted with support from the Swedish Research Council, Alborada Trust, Hans-Gabriel and Alice Wachtmeister Foundation, and Multipark Strategic Research Area.

Multivitamins May Boost Memory and Brain Health in Older Adults

What if you could boost your memory and slow cognitive aging with a single supplement? According to a major new study, you can.

Multivitamins are the most commonly used dietary supplements in the U.S., with 40 percent of those over the age of 60 taking them on a regular basis. They provide a useful route to obtaining the recommended daily amounts of essential vitamins and minerals, especially when an individual cannot meet these needs from their diet alone.

“Vitamins and minerals contribute to the maintenance of neuronal membranes, neurotransmitter release, and protection against oxidative stress, collectively supporting cognitive health,” Chirag Vyas, an instructor in psychiatry at Mass General Research Institute and Harvard Medical School, told Newsweek.

“Several vitamins and minerals are known to be essential for optimal brain function, and a deficiency of one or more of those micronutrients may accelerate cognitive aging,” Vyas, who is the study’s first author, said. “Many in the older population have deficiencies in one or more important micronutrients due to a variety of health reasons. Vitamin B12, vitamin D, lutein, and zinc are top candidates for better cognitive function, but there might be others or how they work together that are also important.”

A stock image shows a woman holding a pill and a glass of water. Taking a daily multivitamin may help slow cognitive decline, scientists say. fizkes/Getty

When it comes to cognitive aging, Alzheimer’s is the most common form of dementia in the United States, affecting roughly 5.8 million Americans, according to the U.S. Centers for Disease Control and Prevention. The progressive condition is characterized by memory loss and cognitive decline in the regions of the brain involved in thought, memory and language. Therefore, finding daily habits that can slow this decline could significantly boost public health across America.

To explore the benefits of supplementation in older adults, researchers at Mass General Brigham directed a large-scale, nationwide randomized controlled trial called the Cocoa Supplement and Multivitamin Outcomes Study (COSMOS). The trial involved more than 21,000 participants over the age of 60 and studied the effects of cocoa extract and multivitamins on cardiovascular health, cancer risk and cognitive aging.

The COSMOS trial has already demonstrated in two separate studies that taking a daily multivitamin can have positive effects on cognitive health. This third study, published on January 18 in the American Journal of Clinical Nutrition, focuses on a subset of 573 participants who underwent in-person cognitive assessments.

“Based on our combined analysis of three separate cognition studies within COSMOS, a daily multivitamin containing 20 or more micronutrients slowed cognitive aging by the equivalent of two years compared to placebo,” Vyas said. It is important to add here that multivitamins should not be taken as a substitute for a balanced diet but rather as a complement.

“It is generally recommended to maintain a healthy diet to ensure getting a range of essential micronutrients,” Vyas said.

It is also possible to overdose on certain vitamins, particularly if you are pregnant or have a medical condition, so it is always best to speak to your doctor before starting any new supplements.

More research is required to identify exactly which vitamins and minerals may be responsible for the positive effects identified in the research and which groups of individuals might benefit most from supplementation, but the studies promise an effective, low-cost strategy for slowing the advance of cognitive aging in older adults.

New online study explores link between healthy brains and bodies

Credit: Ketut Subiyanto from Pexels

So little is understood about the dialogue between the body and the brain. It might seem obvious that our physical state can affect our ability to think, but there are many fundamental questions neuroscientists would still like to answer, with your help.

A new Western-led study, officially launched today, explores the elusive relationship between physical and cognitive health.

Driven by a pioneering series of online brain games, the study will provide invaluable data and insight for world-renowned neuroscientist Adrian Owen and his team to discover more about the links between the body and the brain.

“What we hope to do is to establish definitively whether exercise is beneficial for cognitive function, and if so, which cognitive functions benefit most,” said Owen, professor of cognitive neuroscience and imaging at Western’s Schulich School of Medicine & Dentistry and the department of psychology in the Faculty of Social Science. “We will also look at how this may vary across the lifespan. If exercise is good for your brain, does it confer the same benefits in the old and the young?”

Each completed online survey also gives participants instant results about how their own brain and body function, while Owen and his fellow neuroscientists can identify activities and lifestyle habits that could improve or maintain life-long functioning of the brain.

“We are also going to examine whether playing highly immersive video games can improve your cognitive function. So far, the scientific community is divided on the issue and we want to conduct the definitive study that really gets to the truth.”

For the study, Owen has partnered with the award-winning Science and Industry Museum (Manchester, U.K.). The findings are set to be shared at this year’s Manchester Science Festival, which returns Oct. 18–27, 2024.

“Manchester Science Festival is one of the most popular events of its kind in the U.K. and we are really excited by the thought of using this mass experiment to help shape the program this year and find out new things about how our brains affect our bodies and vice versa,” said Owen.

People living longer, an aging population and a recent study suggesting the COVID-19 pandemic has impacted brain power in people aged 50 and over, all make long-term cognitive and physical health increasingly important. Completing the survey will help neuroscientists build a better understanding of how lifestyle factors relate to the health of our brains across our lifetimes and could in future years support individuals to choose activities that promote healthy cognitive aging.

Owen led a previous study of 11,000 people, published in the journal Nature, which showed that computerized brain training games did not improve cognitive function. This new study is different in that participants now play highly immersive video games.

Will there be a difference? That is what Owen and the team hope to find out.

Answer biggest questions facing our planet

This year’s Manchester Science Festival, produced by the Science and Industry Museum, will explore “Extremes” and offer the opportunity to get hands-on with some of science’s most cutting-edge developments. Festival-goers will explore some of the biggest questions facing our planet through multi-sensory experiences, immersive performances, and hands-on activities.

“We can’t wait for the festival’s return this October. It is a brilliant opportunity to bring together visitors of all ages and interests to be inspired by science in action, and a wonderful way to showcase Manchester’s long-standing position as a leader in progress and innovation,” said Sally MacDonald, director of the Science and Industry Museum.

“We are delighted to be launching the festival with the pioneering Brain and Body study and giving more people the unique opportunity to be part of contemporary developments in science and play a role in furthering scientific knowledge to benefit our collective future.”

Roger Highfield, science director at the Science Museum Group, worked with Owen previously on a mass online survey about IQ, so when the Manchester Science Festival was looking for a partner to study the body and the brain, he knew where to go.

“The survey is huge fun to do, will earn you entry to a prize draw and supports important neuroscientific research—taking part is a no-brainer,” said Highfield.

The survey can be completed online (www.brainbodystudy.com) using a desktop, laptop, or tablet; it is not possible to take part using a phone. It takes around 75 minutes to complete, includes fun brain games and cognitive challenges, and shares results with the participant at the end. Anyone who signs up will have a chance to win Amazon vouchers (equivalent to $100 CAD each).

Provided by University of Western Ontario

Cannabis found to activate specific hunger neurons in brain

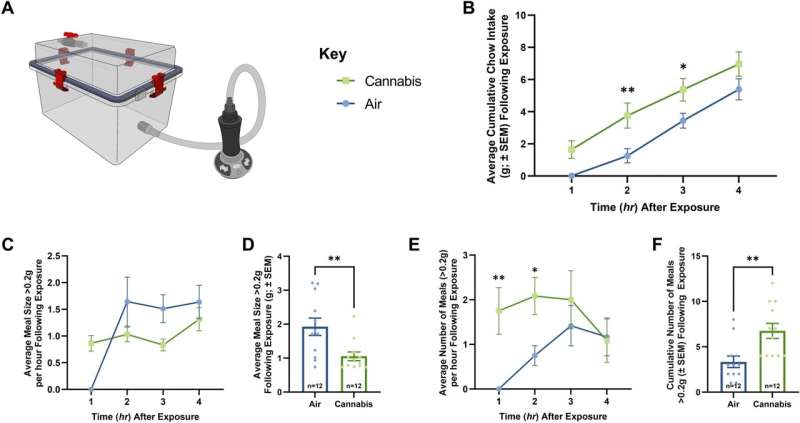

Vapor Cannabis Exposure Augments Meal Frequency. (A) Illustrates the vapor chamber apparatus used in the following studies. (B), (C), and (E) Represent quantification of ingested chow at 1-h intervals following air or cannabis exposure (n = 12/group), significance calculated with two-way repeated measures ANOVA with Tukey HSD post-hoc testing. (D) and (F) Represent quantification average and cumulative meal patterns (n = 12/group), significance calculated with one-way ANOVA test. (B) shows free-feeding rats exposed to 800 mg of cannabis consumed significantly more chow than air rats over 4 h. (C) Displays the average meal size per hour following cannabis and air exposure, statistical testing revealed a significance in the within subjects’ comparison. Meal pattern analysis revealed that vapor cannabis exposed rats consumed smaller meals relative to air-exposed controls (D), and that vapor cannabis exposure led to a trend toward increased total number of meals relative to air-exposed rats by hour (E), means comparison revealed significant differences at the 1 and 2 h timepoints and in total (F). See Supplemental Tables S1 and S2 for ANOVA testing results and post-hoc analysis results respectively. Credit: Scientific Reports (2023). DOI: 10.1038/s41598-023-50112-5

While it is well known that cannabis can cause the munchies, researchers have now revealed a mechanism in the brain that promotes appetite in a set of animal studies at Washington State University.

The discovery, detailed in the journal Scientific Reports, could pave the way for refined therapeutics to treat appetite disorders faced by cancer patients as well as anorexia and potentially obesity.

After exposing mice to vaporized cannabis sativa, researchers used calcium imaging technology, which is similar to a brain MRI, to determine how their brain cells responded. They observed that when the rodents anticipated and consumed palatable food, cannabis activated a set of cells in the hypothalamus that were not activated in unexposed mice.

“When the mice are given cannabis, neurons come on that typically are not active,” said Jon Davis, an assistant professor of neuroscience at WSU and corresponding author on the paper. “There is something important happening in the hypothalamus after vapor cannabis.”

Calcium imaging has been used to study the brain’s reactions to food by other researchers, but this is the first known study to use it to understand those features following cannabis exposure.

As part of this research, the researchers also determined that the cannabinoid-1 receptor, a known cannabis target, controlled the activity of a well-known set of “feeding” cells in the hypothalamus, called Agouti Related Protein neurons. With this information, they used a “chemogenetic” technique, which acts like a molecular light switch, to home in on these neurons when animals were exposed to cannabis. When these neurons were turned off, cannabis no longer promoted appetite.

“We now know one of the ways that the brain responds to recreational-type cannabis to promote appetite,” said Davis.

This work builds on previous research on cannabis and appetite from Davis’ lab, which was among the first to use whole vaporized cannabis plant matter in animal studies instead of injected THC—in an effort to mimic better how cannabis is used by humans. In the previous work, the researchers identified genetic changes in the hypothalamus in response to cannabis, so in this study, Davis and his colleagues focused on that area.

Provided by Washington State University

Research shows Lion’s mane mushroom can combat dementia and cognitive decline

Currently, more than 55 million people worldwide suffer from dementia, and nearly 10 million new cases are diagnosed each year. Cognitive decline has become so pervasive in modern society that it has become normalized across the political spectrum. Some of today’s government officials show serious cognitive decline, and even the de facto President of the United States routinely stumbles around in a stupor, taking cues from handlers and mumbling incoherently at times.

Cognitive decline is a serious health issue worldwide, but in many cases, there are ways to reverse the damage, prevent the death of neurons and regenerate neuronal pathways. Lion’s mane mushroom is an important medicinal food that can promote the biosynthesis of nerve growth factor and effectively combat dementia.

Lion’s mane mushroom promotes the biosynthesis of nerve growth factor

A study published in Mycology finds that Lion’s mane mushroom (Hericium erinaceus) synthesizes two groups of compounds that are very important for nerve growth: hericenones and erinacines. These compounds are derived from the fruiting body and mycelium of the mushroom. Both promote the biosynthesis of nerve growth factor (NGF) and therefore have value in the prevention and treatment of dementia.

Scientists have isolated two erinacine derivatives and two erinacine diterpenoids (Cyatha-3 and 12-diene with isomer) that promote NGF. Scientists have also demonstrated NGF-stimulating activity from three other compounds in Lion’s mane – Hericenones C, D and E. One of the compounds, 3-Hydroxyhericenone F, showed protective activity against endoplasmic reticulum stress-dependent Neuro2a cell death.

Two other species of the mushroom contained several compounds that promote nerve growth factor. Sarcodon scabrosus (A-F) and Sarcodon cyrneus (A-I, P, Q, J, R, K) all show promise for prevention and treatment of cognitive decline.

Interestingly, both the hericenones and erinacines are low-molecular weight compounds that cross the blood-brain barrier with ease. The lion’s mane mushroom was designed at the molecular level to positively affect the brain and heal the nervous system, promoting peripheral nerve regeneration and advancing learning abilities into old age.

High blood sugar levels harden arteries, increasing risk of dementia

While there are ways to reverse cognitive decline through medicinal foods, the prevention of dementia should always be approached through a holistic perspective. When treating dementia, it’s equally important to eliminate the chemicals that are promoting cognitive decline. High blood sugar levels are known to harden the arteries, increasing the risk of blockages in the brain. Obstruction of blood flow to the brain can inhibit blood supply to the nerve cells, resulting in impaired brain function.

A study published in the Nutrition Journal found an association between regular consumption of sugary beverages and dementia risk. The study found that free sugars in beverages can increase dementia risk by upwards of 39 percent. The study included a dietary analysis from 186,622 participants from the UK Biobank cohort. The analysis spanned 206 types of food and 32 types of beverages consumed over the course of 10.6 years. The analysis found a correlation between fructose, glucose and sucrose (table sugar) and dementia risk. The free sugars in soda, fruit drinks and milk-based drinks were strongly related to dementia risk, while the sugars in tea and coffee showed minimal risk.

Herbal teas – including but not limited to: green tea, chamomile, lavender and lemon balm – are all wonderful alternatives to sugar-laden drinks. These beverages, when sweetened with plant-based stevia extract, also provide the body with antioxidants, polyphenols and theaflavins that fight free radicals and therefore protect the brain.

Dementia doesn’t have to plague the population and dumb down the people who are running our government and institutions. Advanced learning can continue into old age. Herbal teas can replace sugary beverages in the diet, thus protecting the brain. Medicinal foods like lion’s mane mushroom can heal damaged neurons while promoting new neuron growth.

This Graphene-Based Brain Implant Can Peer Deep Into the Brain From Its Surface

Finding ways to reduce the invasiveness of brain implants could greatly expand their potential applications. A new device tested in mice that sits on the brain’s surface—but can still read activity deep within—could lead to safer and more effective ways to read neural activity.

There are already a variety of technologies that allow us to peer into the inner workings of the brain, but they all come with limitations. Minimally invasive approaches include functional MRI, where an MRI scanner is used to image changes of blood flow in the brain, and EEG, where electrodes placed on the scalp are used to pick up the brain’s electrical signals.

The former requires the patient to sit in an MRI machine though, and the latter is too imprecise for most applications. The gold standard approach involves inserting electrodes deep into brain tissue to obtain the highest quality readouts. But this requires a risky surgical procedure, and scarring and the inevitable shifting of the electrodes can lead to the signal degrading over time.

Another approach involves laying electrodes on the surface of the brain, which is less risky than deep brain implants and provides greater accuracy than non-invasive approaches. But typically, these devices can only read activity from neurons in the outer layers of the brain.

Now, researchers have developed a thin, transparent surface implant with electrodes made from graphene that can read neural activity deep in the brain. The approach relies on machine learning to uncover relationships between signals in outer layers and those far below the surface.

“We are expanding the spatial reach of neural recordings with this technology,” Duygu Kuzum, a professor at UC San Diego who led the research, said in a press release. “Even though our implant resides on the brain’s surface, its design goes beyond the limits of physical sensing in that it can infer neural activity from deeper layers.”

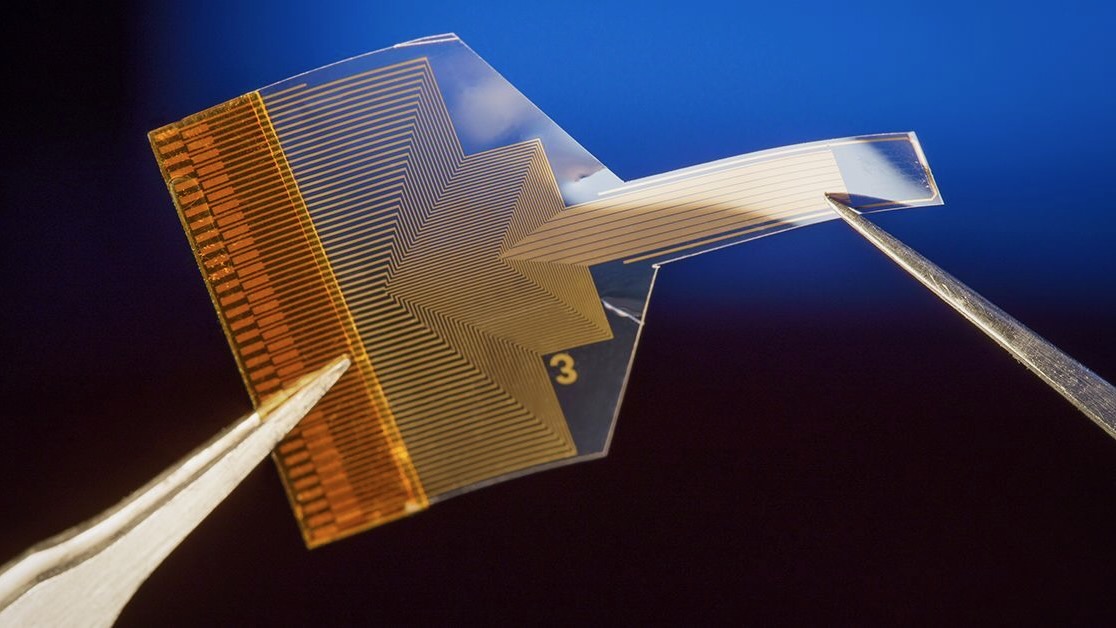

The device itself is made from a thin polymer strip embedded with a dense array of tiny graphene electrodes just 20 micrometers across and connected by ultra-thin graphene wires to a circuit board. Shrinking graphene electrodes to this size is a considerable challenge, say the authors, as it raises their impedance and makes them less sensitive. They got around this by using a bespoke fabrication technique to deposit platinum particles onto the electrodes to boost electron flow.

Crucially, both the electrodes and the polymer strip are transparent. When the team implanted the device in mice, the researchers were able to shine laser light through the implant to image cells deeper in the animals’ brains. This made it possible to simultaneously record electrically from the surface and optically from deeper brain regions.

In these recordings, the team discovered a correlation between the activity in the outer layers and inner ones. So, they decided to see if they could use machine learning to predict one from the other. They trained an artificial neural network on the two data streams and discovered it could predict the activity of calcium ions—an indicator of neural activity—in populations of neurons and single cells in deeper regions of the brain.
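As a rough illustration of the idea of predicting a deep signal from surface recordings, the sketch below fits a model on paired data and checks it on held-out samples. It is not the authors' method: the article describes an artificial neural network trained on real recordings, whereas this sketch swaps in a simple linear ridge regression on synthetic data. The electrode count, noise level, and every function name here are assumptions made for the example.

```python
import numpy as np

def fit_ridge(X, y, alpha=1.0):
    """Closed-form ridge regression: solve (X^T X + alpha*I) w = X^T y."""
    n_features = X.shape[1]
    A = X.T @ X + alpha * np.eye(n_features)
    return np.linalg.solve(A, X.T @ y)

def simulate(n_samples=2000, n_electrodes=16, noise=0.1, seed=0):
    """Synthetic stand-in data (hypothetical): surface electrode features
    and a 'deep' signal that depends linearly on them plus noise."""
    rng = np.random.default_rng(seed)
    surface = rng.normal(size=(n_samples, n_electrodes))
    true_w = rng.normal(size=n_electrodes)
    deep = surface @ true_w + noise * rng.normal(size=n_samples)
    return surface, deep

def r_squared(y_true, y_pred):
    """Fraction of variance in y_true explained by y_pred."""
    ss_res = np.sum((y_true - y_pred) ** 2)
    ss_tot = np.sum((y_true - y_true.mean()) ** 2)
    return 1.0 - ss_res / ss_tot

surface, deep = simulate()
split = 1500  # train on the first 1500 samples, test on the rest
w = fit_ridge(surface[:split], deep[:split])
score = r_squared(deep[split:], surface[split:] @ w)
print(f"held-out R^2: {score:.3f}")
```

Evaluating on held-out samples from the same simulated subject mirrors the limitation noted in the article: a model fitted to one individual's paired recordings says nothing about whether it would transfer to another.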

Using optical approaches to measure brain activity is a powerful technique, but it requires the subject’s head to be fixed under a microscope and for the skull to remain open, making it impractical for reading signals in realistic situations. Being able to predict the same information based solely on surface electrical readings would greatly expand the practicality.

“Our technology makes it possible to conduct longer duration experiments in which the subject is free to move around and perform complex behavioral tasks,” said Mehrdad Ramezani, co-first author of a paper in Nature Nanotechnology on the research. “This can provide a more comprehensive understanding of neural activity in dynamic, real-world scenarios.”

The technology is still a long way from use in humans though. At present, the team has only demonstrated the ability to learn correlations between optical and electrical signals recorded in individual mice. It’s unlikely this model could be used to predict deep brain activity from surface signals in a different mouse, let alone a person.

That means all individuals would have to undergo the fairly invasive data collection process before the approach would work. The authors admit more needs to be done to find higher level connections between the optical and electrical data that would allow models to generalize across individuals.

But given rapid improvements in the technology required to carry out both optical and electrical readings from the brain, it might not be long until the approach becomes more feasible. And it could ultimately strike a better balance between fidelity and invasiveness than competing technologies.

Image Credit: A thin, transparent, flexible brain implant sits on the surface of the brain to avoid damaging it, but with the help of AI, it can still infer activity deep below the surface. David Baillot/UC San Diego Jacobs School of Engineering

Study challenges traditional views on how the brain processes movement and sensation

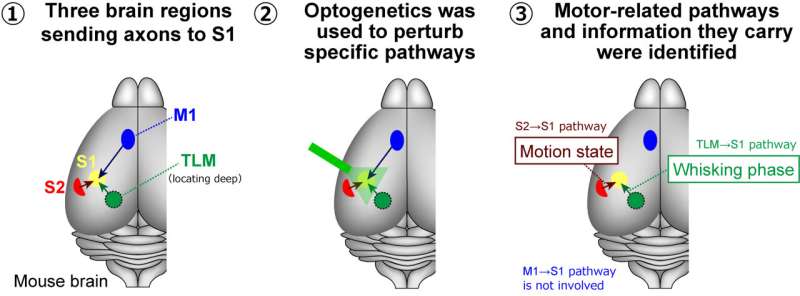

Utilizing optogenetics, researchers at Fujita Health University have selectively deactivated neural pathways with light, revealing new insights into how sensory and motor areas of the mouse brain communicate during movement. Credit: Takayuki Yamashita from Fujita Health University

Our body movements profoundly impact how our brain processes sensory information. Historically, it was believed that the brain’s primary motor cortex played a key role in modulating sensory experiences during movement. However, a new study led by researchers from Fujita Health University has challenged this view.

By selectively inhibiting different neural pathways in the mouse brain, they discovered that regions beyond the primary motor cortex significantly influence the primary sensory cortex during movement.

The brain is widely considered the most complex organ in the human body. The intricate mechanisms through which it processes sensory information and how this information affects and is affected by motor control have captivated neuroscientists for more than a century. Today, thanks to advanced laboratory tools and techniques, researchers can use animal models to solve this puzzle, especially in the mouse brain.

During the 20th century, experiments with anesthetized mice proved that sensory inputs primarily define neuronal activity in the primary sensory cortices—the brain regions processing sensory information, including touch, vision, and audition.

However, over the past few decades, studies involving awake mice revealed that spontaneous behavior, such as exploratory motion and movement of the whiskers called whisking, actually regulates the activity of the sensory responses in the primary sensory cortices. In other words, sensations at the neuronal level appear substantially modulated by body movements, even though the corresponding neuronal circuits and the underlying mechanisms are not fully understood.

To address this knowledge gap, a research team from Japan investigated the primary somatosensory barrel cortex (S1), a region of the mouse brain that handles tactile input from the whiskers. Their latest study, published in The Journal of Neuroscience, was conducted by Professor Takayuki Yamashita from Fujita Health University (FHU) and Dr. Masahiro Kawatani, affiliated with FHU and Nagoya University, along with their team.

The S1 region receives input through the axons from several other areas, including the secondary somatosensory cortex (S2), the primary motor cortex (M1), and the sensory thalamus (TLM).

To investigate how these regions modulate activity in S1, the researchers turned to optogenetics (a technique for controlling activities of specific neuronal populations by light) involving eOPN3, which is a recently discovered light-sensitive protein enabling effective inhibition of specific neural pathways in response to light. Using viruses as a vector, they introduced the gene coding for this protein into the M1, S2, and TLM regions in mice.

Then, they measured neural activity in S1 in awake mice performing spontaneous whisking. During this process, they selectively inhibited different signal inputs going to S1 using light as an ON/OFF switch and observed the effect at S1.

Interestingly, only signal inputs from S2 and TLM to S1, not from M1 to S1, modulated neuronal activity in S1 during spontaneous whisking. Specifically, the pathway from S2 to S1 seems to convey information about the motion state of the whiskers.

Additionally, the TLM-to-S1 pathway appeared to relay information related to the phase of spontaneous whisking, which follows a repetitive and rhythmic pattern.

These results challenge the established view that neuronal activity in sensory cortices is modulated primarily by motor cortices during movement; as Prof. Yamashita remarks, “Our findings provoke a reconsideration of the role of motor-sensory projections in sensorimotor integration and bring to light a new function for S2-to-S1 projections.”

A better understanding of how distinct brain regions modulate activities among each other in response to movement could lead to progress in myriad applied fields. These research insights have far-reaching implications, potentially revolutionizing fields like artificial intelligence (AI), prosthetics, and brain-computer interfaces.

“Understanding these neural mechanisms could greatly enhance the development of AI systems that mimic human sensory-motor integration and aid in creating more intuitive prosthetics and interfaces for those with disabilities,” Prof. Yamashita adds.

In summary, this study sheds light on the intricate workings of the brain. It also paves the way for researching the connection between body motion and sensory perception. As we continue to explore brain-related enigmas, studies like this offer vital clues in our quest to understand the most complex organ in the human body.

Provided by Fujita Health University

Good or bad? High levels of HDL or “good” cholesterol may be linked to dementia, reveals study

Earlier studies have suggested that high-density lipoprotein (HDL) cholesterol is associated with several health benefits, such as promoting heart health. But, according to a recent study, high levels of HDL cholesterol could be linked to a greater risk of dementia.

The study, which was led by Monash University in Melbourne, involved thousands of volunteers from Australia and the United States. The study ran for more than six years and the findings were published in the journal Lancet Regional Health Western Pacific.

Cholesterol is a lipid, a waxy type of fat. It is produced by the liver, but people also take in cholesterol from animal-derived foods, and the ingested cholesterol is then transported throughout the body by the blood.

The body needs cholesterol to:

Help form cell membrane layers

Help the liver make bile for digestion

Produce certain hormones

Produce vitamin D

There are two primary types of cholesterol: High-density lipoprotein (HDL) and low-density lipoprotein (LDL).

Very low-density lipoproteins are a third type of cholesterol that carry triglycerides or the fat the body stores up and uses for energy between meals.

LDL is called bad cholesterol because it can build up on the walls of blood vessels, which then narrow the passageways. If a blood clot forms and gets stuck in one of these narrowed passageways, it can trigger a heart attack or a stroke.

HDL is often called good cholesterol because it absorbs other types of cholesterol and carries them away from the arteries and back to the liver, which then eliminates them from the body.

HDL: Good or bad for overall health?

While conducting the study, scientists recorded 850 incidences of dementia in 18,668 participants. The research team discovered that volunteers with high HDL cholesterol of more than 80 milligrams per deciliter had a 27 percent greater risk of dementia.

They also reported that there was a 42 percent increase in dementia risk for volunteers older than 75.

High HDL cholesterol was linked to an increased risk of all-cause dementia in both middle-aged and older individuals.

“The association appears strongest in those 75 years and above,” explained the researchers.
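As a rough illustration of the figures above, the sketch below works out the crude share of participants who developed dementia and restates the reported percentage increases as ratios. The variable names are made up for this example, and the crude share is simple arithmetic on the quoted counts, not a result reported by the researchers.

```python
# Figures quoted in the article; variable names are illustrative only.
dementia_cases = 850
participants = 18_668

# Crude share of participants with a recorded dementia diagnosis.
# This is plain arithmetic on the quoted counts, not an adjusted estimate.
crude_proportion = dementia_cases / participants

# "27 percent greater risk" and "42 percent increase" restated as ratios
# relative to the comparison group (a ratio of 1.0 means no difference).
ratio_high_hdl = 1 + 27 / 100
ratio_over_75 = 1 + 42 / 100

print(f"Crude share with dementia: {crude_proportion:.2%}")
print(f"Risk ratio, HDL above 80 mg/dL: {ratio_high_hdl:.2f}")
print(f"Risk ratio, volunteers older than 75: {ratio_over_75:.2f}")
```

Reading the percentages as ratios makes clear that they describe risk relative to a comparison group, not the absolute chance of developing dementia.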

Even after accounting for variables such as country, age, sex, daily exercise, education, alcohol consumption and weight change over time, the researchers reported that the link between high HDL cholesterol and dementia remained “significant.”

Monira Hussain, a senior research fellow at Monash University’s School of Public Health and Preventive Medicine, said the study findings can be used to help improve understanding of dementia, but more research is needed.

Hussain said while it has been confirmed that HDL cholesterol has a crucial role in cardiovascular health, the study suggests that continued research can help shed more light on the “role of very high HDL cholesterol in the context of brain health.”

Hussain added that it is worth considering very high HDL cholesterol levels in prediction algorithms for dementia risk.

Researchers recruited a larger cohort for the HDL/dementia risk study

For the study, the research team recruited 16,703 Australians older than 70 years, along with 2,411 people older than 65 years from the United States.

The volunteers examined during the research had no physical disability, cardiovascular disease, dementia, or life-threatening illness at the time of recruitment for the study. They were also “cognitively healthy.”

According to the scientists, while several studies have indicated a connection between HDL cholesterol and adverse health events, evidence regarding its connection to dementia remains limited.

The researchers said an analysis of a medical database examining the link between high HDL cholesterol and dementia revealed that there was only one study from Denmark that established a link.

“Only one study of cohorts from Denmark was identified which suggested that high HDL-C is associated with dementia in people aged 47–68 years,” said the scientists.

Because early-onset dementia may have a different pathophysiology than late-onset dementia, the researchers advised that it is important to extend these results to well-characterized prospective studies of older individuals who are cognitively intact at the study onset.

The data analysis was conducted between October 2022 and January 2023, before publishing the study.

The scientists said their study is the “most comprehensive study” to report high HDL cholesterol and the risk of dementia in the elderly.

New Study Finds the Best Brain Exercises to Boost Memory

Research has found exercise can have a positive impact on your memory and brain health.

A new study linked vigorous exercise to improved memory, planning, and organization.

Data suggests just 10 minutes a day can have a big impact.

Experts have known for years about the physical benefits of exercise, but research has been ongoing into how working out can impact your mind. Now, a new study reveals the best exercise for brain health—and it can help sharpen everything from your memory to your ability to get organized.

The study, which was published in the Journal of Epidemiology & Community Health, tracked data from nearly 4,500 people in the UK who had activity monitors strapped to their thighs for 24 hours a day over the course of a week. Researchers analyzed how their activity levels impacted their short-term memory, problem-solving skills, and ability to process things.

The study found that moderate and vigorous exercise and activities, even those done for under 10 minutes, were linked to much higher cognition scores than those of people who spent most of their time sitting, sleeping, or doing gentle activities. (Vigorous exercise generally includes things like running, swimming, biking up an incline, and dancing; moderate exercise includes brisk walking and anything that gets your heart beating faster.)

The researchers specifically found that people who did these workouts had better working memory (the small amount of information that can be held in your mind and used in the execution of cognitive tasks) and that the biggest impact was on executive processes like planning and organization.

On the flip side: People who spent more time sleeping, sitting, or only moved a little in place of doing moderate to vigorous exercise had a 1% to 2% drop in cognition.

“Efforts should be made to preserve moderate and vigorous physical activity time, or reinforce it in place of other behaviors,” the researchers wrote in the conclusion.

But the study wasn’t perfect—it used previously collected cohort data, so the researchers didn’t know extensive details of the participants’ health or their long-term cognitive health. The findings “may simply be that those individuals who move more tend to have higher cognition on average,” says lead study author John Mitchell, a doctoral training student in the Institute of Sport, Exercise & Health at University College London. But, he adds, the findings could also “imply that even minimal changes to our daily lives can have downstream consequences for our cognition.”

So, why might there be a link between exercise and a good memory? Here’s what you need to know.

Why might exercise sharpen your memory and thinking?

This isn’t the first study to find a link between exercise and enhanced cognition. In fact, the Centers for Disease Control and Prevention (CDC) specifically states online that physical activity can help improve your cognitive health, improving memory, emotional balance, and problem-solving.

Working out regularly can also lower your risk of cognitive decline and dementia. One scientific analysis of 128,925 people published in the journal Preventive Medicine in 2020 found that cognitive decline is almost twice as likely in adults who are inactive vs. their more active counterparts.

But the “why” behind it all is “not entirely clear,” says Ryan Glatt, C.P.T., senior brain health coach and director of the FitBrain Program at Pacific Neuroscience Institute in Santa Monica, CA. However, Glatt says, previous research suggests that “it is possible that different levels of activity may affect brain blood flow and cognition.” Meaning, exercising at a harder clip can stimulate blood flow to your brain and enhance your ability to think well in the process.

“It could relate to a variety of factors related to brain growth and skeletal muscle,” says Steven K. Malin, Ph.D., associate professor in the Department of Kinesiology and Health at Rutgers Robert Wood Johnson Medical School. “Often, studies show the more aerobically fit individuals are, the more dense brain tissue is, suggesting better connectivity of tissue and health.”

Exercise also activates skeletal muscles (the muscles that connect to your bones) that are thought to release hormones that communicate with your brain to influence the health and function of your neurons, i.e. cells that act as information messengers, Malin says. “This could, in turn, promote growth and regeneration of brain cells that assist with memory and cognition,” he says.

Currently, the CDC recommends that most adults get at least 150 minutes a week of moderate-intensity exercise.

The best exercises for your memory

Overall, the CDC suggests doing the following to squeeze more exercise into your life to enhance your brain health:

Dance

Do squats or march in place while watching TV

Start a walking routine

Use the stairs

Walk your dog, if you have one (one study found that dog owners walk, on average, 22 minutes more every day than people who don’t own dogs)

However, the latest study suggests that more vigorous activities are really what’s best for your brain. The study didn’t pinpoint which exercises, in particular, are best—“when wearing an accelerometer, we do not know what sorts of activities individuals are doing,” Glatt points out. However, getting your heart rate up is key.

That can include doing exercises like:

HIIT workouts

Running

Jogging

Swimming

Biking on an incline

Dancing

Malin’s advice: “Take breaks in sitting throughout the day by doing activity ‘snacks.’” That could mean doing a minute or two of jumping jacks, climbing stairs at a brisk pace, or doing air squats or push-ups to try to replace about six to 10 minutes of sedentary behavior a day. “Alternatively, trying to get walks in for about 10 minutes could go a long way,” he says.

Scientists link growth hormone to anxiety and fear memory through specific neuron group

Growth hormone (GH) acts on many tissues throughout the body, helping build bones and muscles, among other functions. It is also a powerful anxiolytic. A study conducted by researchers at the University of São Paulo (USP) in Brazil has produced a deeper understanding of the role of GH in mitigating anxiety and, for the first time, identified the population of neurons responsible for modulating the influence of GH on the development of neuropsychiatric disorders involving anxiety, depression and post-traumatic stress. An article on the study is published in the Journal of Neuroscience.

In the study, which was supported by FAPESP, the researchers found that male mice lacking the GH receptor in a group of somatostatin-expressing neurons displayed increased anxiety. Somatostatin is a peptide that regulates several physiological processes, including the release of GH and other hormones, such as insulin.

On the other hand, they also found that the absence of the GH receptor in somatostatin-expressing neurons decreased fear memory, a key feature of post-traumatic stress disorder, in males and females. The discovery could contribute to the future development of novel classes of anxiolytic drugs.

“Our discovery of the mechanism involving anxiolytic effects of GH offers a possible, merely chemical, explanation for these disorders, suggesting why patients with more or less GH secretion are more or less susceptible to them,” said José Donato Júnior, last author of the article and professor at the university’s Biomedical Sciences Institute (ICB-USP).

In the study, the researchers conducted three types of experiment involving mice (open field, elevated plus maze, and light/dark box) to test the animals’ capacity to explore the environment and take risks. “These are well-established experiments to analyze behavior similar to anxiety and memory of fear, which is an element in post-traumatic stress. As a result, we were able to explore the role of GH in these animals,” Donato explained.

The results of the study did not point to any reasons for the lack of increased anxiety-like behavior in female mice. “We believe it may be related to sexual dimorphism. We know the brain region containing the neurons we studied is a bit different in males and females. Some neurological disorders are also different in men and women, probably not by chance,” he said.

Chemistry

Thousands of people suffer from neuropsychiatric disorders all over the world. Although anxiety and depression are the most common, their causes have yet to be fully elucidated. Scientists think they may be due to multiple factors, such as stress, genetics, social pressures, economic difficulties and/or gender issues, among others.

There is also growing evidence that hormones play an important role in regulating various neurological processes and influencing susceptibility to neuropsychiatric disorders. Alterations in levels of sex hormones such as estradiol affect anxiety, depression and fear memory in rodents and humans, for example. Preliminary results of other studies suggest that glucocorticoids (steroid hormones such as cortisol as well as synthetic forms such as prednisone and dexamethasone) may be involved in the development of neuropsychiatric disorders.

In the case of GH, the regulatory mechanism in neurons associated with such disorders had not yet been discovered. “We demonstrated that GH changes the synapses and structurally alters the neurons that secrete somatostatin,” Donato said.

The study also showed that anxiety, post-traumatic stress and fear memory are different facets of the same neuronal circuit. According to Donato, anxiety can be defined as excessive fear or distrust, while fear memory relates to an adverse past event that produced a brain alteration, which triggers an exacerbated response whenever the subject is exposed to a similar stimulus. This response may range from weeping to tremors and even paralysis.

“All this happens in the same neuron population, which expresses the GH receptor. In our experiment, fear memory was reduced in mice when we switched the GH receptor off. This means the capacity to form fear memory is impaired. It may be the case that GH contributes to the development of post-traumatic stress,” he said.

Another type of evidence for this is that chronic stress raises the level of a hormone called ghrelin, a powerful trigger of GH secretion. “The role of ghrelin in post-traumatic stress has been studied for some time. Research has shown that ghrelin-induced GH secretion increases in chronic stress, favoring the development of fear memory and post-traumatic stress in the animal’s brain,” he said.

How GH affects neurological disorders

In humans, GH is secreted by the pituitary gland into the bloodstream, promoting tissue growth throughout the body by means of protein formation, cell multiplication and cell differentiation. GH is indispensable during childhood, adolescence and pregnancy, when its secretion peaks. In old age, it naturally declines.

GH deficiency can lead to dwarfism, which is mostly manifested from 2 years of age, preventing growth during childhood and adolescence. “Previous research involving patients with GH deficiency showed a higher prevalence of anxiety and depression in these individuals, but the cause hadn’t yet been established. Some authors blame it on problems of self-image and bullying due to short stature,” Donato said.

The study involving mice demonstrated the key role played by GH in these disorders without the presence of potential confounders such as body image issues. “We were able to find out how much is due directly to the effects of GH or the indirect effects of growth deficit. Because we were able to identify the mechanism involving GH, we know it’s a direct cause of anxiety disorder, and this knowledge can facilitate the development of therapies,” Donato said.

Next steps for the research group include an investigation of the role played by GH during pregnancy. “We know one of the peaks in GH production occurs during pregnancy. We also know that the prevalence of depression rises in this period owing to post-partum depression. Of course, these disorders also reflect social, economic and other kinds of pressure, but we mustn’t forget that the rise in hormone secretion during and after pregnancy can dysregulate brain functioning, also leading to this kind of mental illness,” he said.

Source:

São Paulo Research Foundation (FAPESP)

Journal reference:

dos Santos, W. O., et al. (2023). […]

Exploring fear engrams to shed light on physical manifestation of memory in the brain

In a world grappling with the complexities of mental health conditions like anxiety, depression, and PTSD, new research from Boston University neuroscientist Dr. Steve Ramirez and collaborators offers a unique perspective. The study, recently published in the Journal of Neuroscience, delves into the intricate relationship between fear memories, brain function, and behavioral responses. Dr. Ramirez, along with his co-authors Kaitlyn Dorst, Ryan Senne, Anh Diep, Antje de Boer, Rebecca Suthard, Heloise Leblanc, Evan Ruesch, Sara Skelton, Olivia McKissick, and John Bladon, explores the elusive concept of fear engrams, shedding light on the physical manifestation of memory in the brain. As Ramirez emphasizes, the initiative was led by Dorst and Senne, with the project serving as the cornerstone of Dorst’s PhD.

Beyond its implications for neuroscience, their research marks significant strides in understanding memory formation and holds promise for advancing our comprehension of various behavioral responses in different situations, with potential applications in the realm of mental health. In this Q&A, Dr. Ramirez discusses the motivations, challenges, and key findings of the study.

What motivated you and your research collaborators to study the influence of fear memories on behavior in different environments?

The first thing is that fear memory is one of the most studied kinds of memory in rodents, if not the most studied. It’s something that gives us a quantitative, measurable behavioral readout. So when an animal’s in a fearful state, we can begin looking at how its behavior has changed and mark those changes in behavior as an index of fear. Fear memories in particular are our focus because they lead to some stereotyped behaviors in animals such as freezing in place, which is one of many ways that fear manifests behaviorally in rodents.

So that’s one angle. The second angle being that fear is such a core component of a variety of pathological states in the brain. So including probably especially PTSD, but also including generalized anxiety, for instance, and even certain components of depression for that matter. So there’s a very direct link between a fear memory and its capacity to evolve or devolve in a sense into a pathological state such as PTSD. It gives us a window into what’s going on in those instances as well. We studied fear because we can measure it predictably in rodents, and it has direct translational relevance in disorders involving dysregulated fear responses as well.

Can you explain what fear engrams are and how you used optogenetics to reactivate them in the hippocampus?

An engram is this elusive term that generally means the physical manifestation of memory. So, whatever memory’s physical identity is in the brain, that’s what we term an engram. The overall architecture in the brain that supports the building that is memory. I say elusive because we don’t really know what memory fully looks like in the brain. And we definitely don’t know what an engram looks like. But, we do have tips of the iceberg kind of hints that for the past decade, we’ve been able to really use a lot of cutting edge tools in neuroscience to study.

In our lab, we’ve made a lot of headway in visualizing the physical substrates of memories in the brain. For instance, we know that there’s cells throughout the brain. It’s a 3D phenomenon distributed throughout the brain but there’s cells throughout the brain that are involved in the formation of a given memory such as a fear memory and that there’s areas of the brain that are particularly active during the formation of a memory.

What were the main findings about freezing behavior in smaller versus larger environments during fear memory reactivation?

It’s thankfully straightforward and science is often anything but. First, if we reactivate this fear memory when the animals are in a small environment, then they’ll default to freezing–they stay in place. This is presumably an adaptive response so as to avoid detection by a potential threat. We think the brain has done the calculus of, can I escape this environment? Perhaps not. Let me sit in a corner and be vigilant and try to detect any potential threats. Thus, the behavior manifests as freezing.

The neat part is that in that same animal, if we reactivate the exact same cells that led to freezing in the small environment, everything is the exact same: the cells that we’re activating, the fear memory that it corresponds to, the works. But, if we do that in a large environment, then it all goes away. The animals don’t freeze anymore. If anything, a different repertoire of behaviors emerges. Basically, they start doing other things that are just not freezing. That was the initial take-home for us: when we artificially reactivate the fear memory in the small environment, they freeze; when we do that in the large environment, they don’t.

What was cool for us about that finding in particular was that it means that these fear memory cells are not hardwired to produce the same exact response every single time they’re reactivated. At some point, the brain determines, “I’m recalling a fear memory and now I have to figure out what’s the most adaptive response.”

Were there any challenges or obstacles you encountered during the research process, and how did you overcome them?

There’s a couple. The first is that the behavior, ironically enough, was reasonably straightforward for us to reproduce and to do again and again and again, so we were convinced that there was some element of truth there. The second half of the study, and the one that probably takes up the most space in the paper, was figuring out what in the brain is mediating this difference. As we observed, the animals are freezing when we artificially activate a memory in a small environment, and they’re not freezing in the large environment. But, we’re activating the same cells. So, what is different about the animal’s brain […]

Think you’re good at multi-tasking? Here’s how your brain compensates – and how this changes with age

Country: Australia

We’re all time-poor, so multi-tasking is seen as a necessity of modern living. We answer work emails while watching TV, make shopping lists in meetings and listen to podcasts when doing the dishes. We attempt to split our attention countless times a day when juggling both mundane and important tasks.

But doing two things at the same time isn’t always as productive or safe as focusing on one thing at a time.

The dilemma with multi-tasking is that when tasks become complex or energy-demanding, like driving a car while talking on the phone, our performance often drops on one or both.

Here’s why – and how our ability to multi-task changes as we age.

Doing more things, but less effectively

The issue with multi-tasking, at a brain level, is that two tasks performed at the same time often compete for common neural pathways, like two intersecting streams of traffic on a road.

In particular, the brain’s planning centres in the frontal cortex (and connections to the parieto-cerebellar system, among others) are needed for both motor and cognitive tasks. The more tasks rely on the same sensory system, like vision, the greater the interference.

This is why multi-tasking, such as talking on the phone while driving, can be risky. It takes longer to react to critical events, such as a car braking suddenly, and you have a higher risk of missing critical signals, such as a red light.

The more involved the phone conversation, the higher the accident risk, even when talking “hands-free”.

Generally, the more skilled you are on a primary motor task, the better able you are to juggle another task at the same time. Skilled surgeons, for example, can multi-task more effectively than residents, which is reassuring in a busy operating suite.

Highly automated skills and efficient brain processes mean greater flexibility when multi-tasking.

Adults are better at multi-tasking than kids

Both brain capacity and experience endow adults with a greater capacity for multi-tasking compared with children.

You may have noticed that when you start thinking about a problem, you walk more slowly, and sometimes to a standstill if deep in thought. The ability to walk and think at the same time gets better over childhood and adolescence, as do other types of multi-tasking.

When children do these two things at once, their walking speed and smoothness both wane, particularly when also doing a memory task (like recalling a sequence of numbers), verbal fluency task (like naming animals) or a fine-motor task (like buttoning up a shirt). Alternatively, outside the lab, the cognitive task might fall by the wayside as the motor goal takes precedence.

Brain maturation has a lot to do with these age differences. A larger prefrontal cortex helps share cognitive resources between tasks, thereby reducing the costs. This means better capacity to maintain performance at or near single-task levels.

The white matter tract that connects our two hemispheres (the corpus callosum) also takes a long time to fully mature, placing limits on how well children can walk around and do manual tasks (like texting on a phone) together.

For a child or adult with motor skill difficulties, or developmental coordination disorder, multi-tasking errors are more common. Simply standing still while solving a visual task (like judging which of two lines is longer) is hard. When walking, it takes much longer to complete a path if it also involves cognitive effort along the way. So you can imagine how difficult walking to school could be.

What about as we approach older age?

Older adults are more prone to multi-tasking errors. When walking, for example, adding another task generally means older adults walk much slower and with less fluid movement than younger adults.

These age differences are even more pronounced when obstacles must be avoided or the path is winding or uneven.

Older adults tend to enlist more of their prefrontal cortex when walking and, especially, when multi-tasking. This creates more interference when the same brain networks are also enlisted to perform a cognitive task.

These age differences in performance of multi-tasking might be more “compensatory” than anything else, allowing older adults more time and safety when negotiating events around them.

Older people can practise and improve

Testing multi-tasking capabilities can tell clinicians about an older patient’s risk of future falls better than an assessment of walking alone, even for healthy people living in the community.

Testing can be as simple as asking someone to walk a path while either mentally subtracting by sevens, carrying a cup and saucer, or balancing a ball on a tray.

Patients can then practise and improve these abilities by, for example, pedalling an exercise bike or walking on a treadmill while composing a poem, making a shopping list, or playing a word game.

The goal is for patients to be able to divide their attention more efficiently across two tasks and to ignore distractions, improving speed and balance.

There are times when we do think better when moving

Let’s not forget that a good walk can help unclutter our mind and promote creative thought. And, some research shows walking can improve our ability to search and respond to visual events in the environment.

But often, it’s better to focus on one thing at a time

We often overlook the emotional and energy costs of multi-tasking when time-pressured. In many areas of life, including home, work and school, we think it will save us time and energy. But the reality can be different.

Multi-tasking can sometimes sap our reserves and create stress, raising our cortisol levels, especially when we’re time-pressured. If such performance is sustained over long periods, it can leave you feeling fatigued or just plain empty.

Deep thinking is energy demanding by itself and […]

Mind Control Breakthrough: Caltech’s Pioneering Ultrasound Brain–Machine Interface

The latest advancements in Brain-Machine Interfaces feature functional ultrasound (fUS), a non-invasive technique for reading brain activity. This innovation has shown promising results in controlling devices with minimal delay and without the need for frequent recalibration. Credit: SciTechDaily.com

Functional ultrasound (fUS) marks a significant leap in Brain-Machine Interface technology, offering a less invasive method for precise control of electronic devices by interpreting brain activity.

Brain–machine interfaces (BMIs) are devices that can read brain activity and translate that activity to control an electronic device like a prosthetic arm or computer cursor. They promise to enable people with paralysis to move prosthetic devices with their thoughts.

Many BMIs require invasive surgeries to implant electrodes into the brain in order to read neural activity. However, in 2021, Caltech researchers developed a way to read brain activity using functional ultrasound (fUS), a much less invasive technique.

Functional Ultrasound: A Game Changer for BMIs

Now, a new study offers a proof of concept that fUS technology can be the basis for an “online” BMI: one that reads brain activity, deciphers its meaning with decoders programmed with machine learning, and consequently controls a computer that can accurately predict movement with minimal delay.

Image: Ultrasound is used to image two-dimensional sheets of the brain, which can then be stacked together to create a 3-D image. (Credit: Courtesy of W. Griggs)

The study was conducted in the Caltech laboratories of Richard Andersen, James G. Boswell Professor of Neuroscience and director and leadership chair of the T&C Chen Brain–Machine Interface Center; and Mikhail Shapiro, Max Delbrück Professor of Chemical Engineering and Medical Engineering and Howard Hughes Medical Institute Investigator. The work was a collaboration with the laboratory of Mickael Tanter, director of physics for medicine at INSERM in Paris, France.

Advantages of Functional Ultrasound

“Functional ultrasound is a completely new modality to add to the toolbox of brain–machine interfaces that can assist people with paralysis,” says Andersen. “It offers attractive options of being less invasive than brain implants and does not require constant recalibration. This technology was developed as a truly collaborative effort that could not be accomplished by one lab alone.”

“In general, all tools for measuring brain activity have benefits and drawbacks,” says Sumner Norman, former senior postdoctoral scholar research associate at Caltech and a co-first author on the study. “While electrodes can very precisely measure the activity of single neurons, they require implantation into the brain itself and are difficult to scale to more than a few small brain regions. Non-invasive techniques also come with tradeoffs. Functional magnetic resonance imaging [fMRI] provides whole-brain access but is restricted by limited sensitivity and resolution. Portable methods, like electroencephalography [EEG], are hampered by poor signal quality and an inability to localize deep brain function.”

Image: The vasculature of the posterior parietal cortex as measured by functional ultrasound neuroimaging. (Credit: Courtesy of W. Griggs)

Ultrasound Imaging Explained

Ultrasound imaging works by emitting pulses of high-frequency sound and measuring how those sound vibrations echo throughout a substance, such as various tissues of the human body. Sound waves travel at different speeds through these tissue types and reflect at the boundaries between them. This technique is commonly used to take images of a fetus in utero, and for other diagnostic imaging.
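As a rough illustration of the pulse-echo idea described above, the depth of a reflecting boundary can be estimated from how long an echo takes to return. The sketch below is a simplification, not the method used in the study: it assumes a single average speed of sound in soft tissue (about 1540 m/s, a common textbook value), and the function name and example timing are hypothetical.

```python
# Minimal pulse-echo sketch. Assumption: one average speed of sound for all
# soft tissue (~1540 m/s). Real tissues differ slightly, as the text notes.
SPEED_OF_SOUND_TISSUE_M_S = 1540.0


def echo_depth_m(round_trip_time_s, speed_m_s=SPEED_OF_SOUND_TISSUE_M_S):
    """Estimate reflector depth in metres from an echo's round-trip time.

    The pulse travels to the boundary and back, so the one-way distance
    is half of speed * time.
    """
    if round_trip_time_s < 0:
        raise ValueError("round-trip time must be non-negative")
    return speed_m_s * round_trip_time_s / 2.0


if __name__ == "__main__":
    # Hypothetical example: an echo that returns after 26 microseconds.
    depth = echo_depth_m(26e-6)
    print(f"Estimated depth: {depth * 1000:.1f} mm")
```

An imaging system repeats this kind of timing measurement along many directions to build up a picture, which is why differences in sound speed between tissues matter for accuracy.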

Because the skull itself is not permeable to sound waves, using ultrasound for brain imaging requires a transparent “window” to be installed into the skull. “Importantly, ultrasound technology does not need to be implanted into the brain itself,” says Whitney Griggs (PhD ’23), a co-first author on the study. “This significantly reduces the chance for infection and leaves the brain tissue and its protective dura perfectly intact.”

“As neurons’ activity changes, so does their use of metabolic resources like oxygen,” says Norman. “Those resources are resupplied through the blood stream, which is the key to functional ultrasound.” In this study, the researchers used ultrasound to measure changes in blood flow to specific brain regions. In the same way that the sound of an ambulance siren changes in pitch as it moves closer and then farther away from you, red blood cells will increase the pitch of the reflected ultrasound waves as they approach the source and decrease the pitch as they flow away. Measuring this Doppler-effect phenomenon allowed the researchers to record tiny changes in the brain’s blood flow down to spatial regions just 100 micrometers wide, about the width of a human hair. This enabled them to simultaneously measure the activity of tiny neural populations, some as small as just 60 neurons, widely throughout the brain.

Innovative Application in Non-Human Primates

The researchers used functional ultrasound to measure brain activity from the posterior parietal cortex (PPC) of non-human primates, a region that governs the planning of movements and contributes to their execution. The region has been studied by the Andersen lab for decades using other techniques.

The animals were taught two tasks, requiring them to either plan to move their hand to direct a cursor on a screen, or plan to move their eyes to look at a specific part of the screen. They only needed to think about performing the task, not actually move their eyes or hands, as the BMI read the planning activity in their PPC.

“I remember how impressive it was when this kind of predictive decoding worked with electrodes two decades ago, and it’s amazing now to see it work with a much less invasive method like ultrasound,” says Shapiro.

Promising Results and Future Plans

The ultrasound data were sent in real time to a decoder (previously trained to decode the meaning of that data using machine learning), which then generated control signals to move a cursor to where the animal intended it to go. The BMI did this successfully for eight radial targets, with mean errors of less than 40 degrees.
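To make the "mean error in degrees" figure more concrete, here is a minimal sketch of how an angular error between a decoded direction and an intended radial target could be computed. The article does not say how the researchers calculated their error metric, so this is only one plausible reading; the function names and the decoded offsets are hypothetical illustration values, not data from the study.

```python
def radial_targets(n=8):
    """Directions (in degrees) of n evenly spaced radial targets."""
    return [i * 360.0 / n for i in range(n)]


def angular_error_deg(decoded_deg, intended_deg):
    """Smallest absolute angle between two directions, in the range [0, 180]."""
    diff = (decoded_deg - intended_deg) % 360.0
    return min(diff, 360.0 - diff)


def mean_angular_error_deg(decoded, intended):
    """Average angular error over paired decoded and intended directions."""
    if len(decoded) != len(intended) or not decoded:
        raise ValueError("need two non-empty lists of equal length")
    errors = [angular_error_deg(d, t) for d, t in zip(decoded, intended)]
    return sum(errors) / len(errors)


if __name__ == "__main__":
    targets = radial_targets()  # 0, 45, 90, ... 315 degrees
    # Made-up decoding offsets for illustration only.
    offsets = [10, -25, 40, -5, 30, -35, 15, -20]
    decoded = [t + o for t, o in zip(targets, offsets)]
    print(f"Mean angular error: {mean_angular_error_deg(decoded, targets):.1f} degrees")
```

With eight targets spaced 45 degrees apart, an error below about 22.5 degrees would always land nearest the intended target, which gives some intuition for what a mean error under 40 degrees implies.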

“It’s significant that the technique does not require the BMI to be recalibrated each day, unlike other BMIs,” says Griggs. “As an analogy, imagine […]

All Your Brain Wants For Christmas Are 12 Tools For Career Success

You have the most scientific and innovative tools yet to rewire your brain for success in 2024. What are you giving your brain for Christmas and the New Year? That might sound like a silly question, and you could be rolling your eyes, but I haven’t been sampling the Christmas cheer. Neuroscientists tell us that the brain needs certain things to perform at its best. After all, your brain is your best friend. It’s with you 24/7, and never leaves your side (or I should say your head). The truth of the matter is that you can’t have optimal career success if you ignore your sidekick. The good news is that as we approach the new year, modern neuroscience has developed imaging techniques to advance our understanding of how to nurture our brain for optimal success. So don’t be a Christmas Scrooge. Take advantage of the latest science-backed tools and give your brain the twelve best gifts of the holiday season.

What Your Brain Wants Under The Tree

If your brain could speak, it would tell you that it likes and requires certain things to perform at its best. You can’t be successful without it, so it only makes sense to nourish it with the things it likes. Consider the following actions as tools that will give it what it needs for optimal performance.

-1. Green time to offset screen time. Brain scans show that people who spend time outdoors have more gray matter in the prefrontal cortex and a stronger ability to think clearly and to self-regulate their emotions.

-2. Blood flow. Movement and physical exercise feed your brain the extra blood flow it needs to slow the onset of memory loss and dementia.

-3. Safety. Your brain requires psychological safety to focus on work tasks. Under fear, such as that caused by an overbearing boss, your brain focuses on how to avoid the threat, distracting you from engaging with and completing work tasks.

-4. Music. The brain loves music. Repeated listening to meaningful music cultivates beneficial brain plasticity, improving memory and performance. Wearing earpods and listening to your favorite music while working enhances engagement and productivity.

-5. Ample sleep. Your brain gets annoyed when it doesn’t get the rest it needs. But ample sleep restores clarity and performance by actively refining cortical plasticity to help you manage job stress.

-6. Novelty. Your brain likes the creative mojo that comes from trying new things. Novelty promotes adaptive learning by resetting key brain circuits and enhances your ability to update new ideas and consolidate them into existing neurological frameworks.

-7. Social connections. People who volunteer, attend classes or get together with friends at least once a week have healthier brains in the form of more robust gray matter and less cognitive decline.

-8. Broad perspective. A broad perspective allows your brain to build on the positive aspects of your career and see future possibilities, so it wants you to keep the big picture in mind.

-9. Microbreaks. Short breaks of five minutes—when you meditate, deep breathe, stretch or gaze out the window—throughout your workday mitigate decision fatigue, enhance energy and reset your brain.

-10. Brain foods. Proteins, such as meats, poultry, dairy, cheese and eggs, give your brain the amino acids it needs to create neurotransmitter pathways. Omega-3 fatty acids from fish such as salmon, mackerel, tuna and sardines put your brain in a good mood. It also likes the B vitamins in eggs, whole grains, fish, avocados and citrus fruits.

-11. Brain fitness. Keeping the brain fit—with challenges such as puzzles, reading or learning a new skill—slows cognitive decline and prevents degenerative disorders.

-12. Resolved arguments. Scientists discovered that when you resolve an argument by day’s end, it significantly reduces brain stress.

Your Brain Wants Order, Not Chaos, During The Holiday

The best gift you can give your brain this holiday season is order instead of chaos. At some point, though, you probably will have to perform several activities at once. If multitasking becomes a pattern, it can backfire. When you bounce between several job tasks at once, you force your brain to keep refocusing with each switch, reducing productivity by up to 40%. Multitasking undermines productivity and neutralizes efficiency, overwhelming your brain and causing fractured thinking, lack of concentration and decision fatigue.

According to Ben Ahrens, neuroscience researcher and CEO of the neuroplasticity program re-origin, the research is clear that multitasking and context-switching come at a cognitive cost. He says it takes its toll on neurotransmitters such as dopamine, our “feel good” hormone that keeps us motivated and focused so we can close the tab on a challenging project, and that our brains are not wired to adapt positively to long-term stress.

In neuroscience, Ahrens states, the most important thing we can do to reduce stress is to give the brain order. When we’re overwhelmed with decision-making, the brain moves back and forth between the central executive network and the default mode network, he explains. Rather than let our brains activate the default mode network, which is when we’re operating on autopilot and mulling over everything we have to do rather than doing it, we can use tools that activate the central executive network and bring order to the brain.

Use 3 x 3 to simplify your goals: The brain likes to think in threes, so aim to have no more than three goals per week and three smaller goals each day to set realistic expectations.

Focus on the one thing to get the ball rolling. If you need help figuring out where to start, focus on the one thing that, once completed, will make […]

Brain Health Doesn’t Have to Make You Puzzled

If you’re like most of us, as you age you’re looking for ways to improve your focus and concentration. There is growing awareness, whether in the news or in talk among friends, of memory problems like dementia and Alzheimer’s. You may even find yourself “checking in” to make sure that you are not forgetting things. You may also start to notice brain fog and problems concentrating. Is this age-related, or something we can fix?

Concentration and memory

When I was younger, I never had to worry about my concentration or memory. If I had to take a test, I was always sharp. Somewhere along the line (I have forgotten when) I started to lose some of that sharp, quick mind. I have days when I battle a bit of “fog.” I notice that I struggle to stay focused on a project.

Natural alternatives

Thankfully, there are natural alternatives that can be effective without the negative side effects of prescriptions. Let’s take a look at amino acids for brain health. So, we’re not focusing here on HOW we lose concentration due to poor diet or exposure to disruptors but instead, focusing on natural ways to support the brain.

Amino acids

Amino acids are the building blocks of protein. They are essential for muscle growth, immune system function, and cognitive function. There are 20 different amino acids that the body needs in order to function properly. Eleven of these amino acids can be produced by the body, while the other nine must be obtained through food or supplements. Let’s focus on three nutrients that have been shown to be beneficial for brain function: the amino acids tyrosine and glutamine, and choline, which is an essential nutrient rather than an amino acid. Please note that many other foods and supplements can be helpful.

Tyrosine

Tyrosine supplementation may help increase your levels of the neurotransmitters dopamine, adrenaline and norepinephrine. Some call it a “brain-booster” because it may help increase alertness and improve cognitive performance. It is being studied to see if it helps with depression. The FDA recognizes it as safe for most people, but it could interact with other medications.

Glutamine