
Cocoa Could Boost Brains of Older Adults With Poor Diets

As we get older, we have to work harder to protect our brain and cognitive function. Some of us do crosswords or learn new skills, but new research is beginning to show that our diet might also play an important role.

Several studies have pointed towards cocoa extract as having a potential protective effect on cognition in older adults. However, the results of these trials have so far been inconclusive. Now, researchers from Mass General Brigham have investigated these effects further in a large-scale, placebo-controlled clinical trial and identified one group who may be particularly suited to cocoa supplementation.

“Cocoa extract is rich in biologically active compounds, particularly flavanols, which have potential positive effects on cognitive function,” study authors Chirag Vyas and Olivia Okereke told Newsweek. “Specifically, cocoa extract could boost cognition by providing antioxidants and reducing inflammation, and by improving blood flow, which helps the brain function better.”

To investigate these effects, the team studied a subset of 573 older individuals drawn from the Cocoa Supplement and Multivitamin Outcomes Study (COSMOS), a long-term study of more than 21,000 older adults that investigates the effects of cocoa supplementation and multivitamins on senior health.

In this subset study, published in The American Journal of Clinical Nutrition, participants received either 500 mg of cocoa extract per day or a placebo over the course of two years. At the start and end of the study, participants were asked to complete a series of tasks to test their overall cognition, memory and attention.

Overall, the researchers did not find any significant association between cocoa supplementation and cognition. But one group of individuals did see some positive effects. “While there were no differences between cocoa extract and placebo when looking at cognitive function in the entire clinic sample, we found that cocoa extract resulted in better cognitive function among those who had lower [diet quality] at study enrolment,” Vyas and Okereke said. “So, our findings add support to the idea that cocoa flavanols can boost cognitive function among the at-risk group of older people who have lower diet quality.”

It is important to note that this cocoa supplementation is not the same as eating a bar of chocolate every day. “[We used a] cocoa extract supplement that contains levels of cocoa flavanols that greatly surpass what a person could consume from chocolate without adding excessive calories to their diet,” Vyas and Okereke said. More work needs to be done to investigate this relationship and the potential therapeutic use of cocoa supplementation for at-risk older adults, but this study marks an important step in research on this topic.

“Our findings shed light on the potential benefits of cocoa extract supplement for cognitive function among older people with lower diet quality,” Vyas and Okereke said. “This is important because some older people, for health or other reasons, may be facing the issue of low diet quality.


“Also, it would be interesting for future studies to replicate our findings and to investigate further some of the mechanisms by which cocoa extract influences cognitive function.”


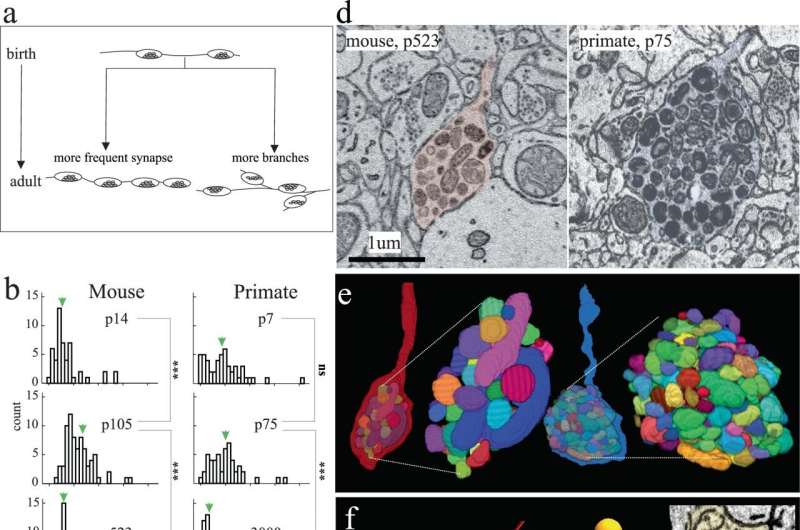

When do brains grow up? Research shows mouse and primate brains mature at same pace

Excitatory axon development in mouse and primate. (a) Cartoon depicting hypothetical models of excitatory axon development: axons increase their synapse frequency (left) and/or make more branches (right). (b, c) Histograms of the number of synapses/µm and branches/µm, respectively, of excitatory axons at different ages in mouse (left) and primate (right) in V1 L2/3. Green arrows indicate the approximate mean. (d) Single 2D EM images and (e) 3D reconstructions of representative terminal axon retraction bulbs in mouse V1, p523 (left) and primate V1, p75 (right). The 3D reconstructions show individually colored mitochondria contained within each retraction bulb. (f) Skeleton reconstructions of mouse (red) and primate (blue) axons containing terminal retraction bulbs (asterisk; from d) and spine (orange circle) or shaft (green circle) synapses. Insets: 2D EM images of spine and shaft synapses made by the primate (right) and mouse (bottom) axons containing a retraction bulb. Credit: Nature Communications (2023). DOI: 10.1038/s41467-023-43088-3

An Argonne study has revealed that short-lived mice and longer-lived primates develop brain synapses on the same timeline, challenging assumptions about disease and aging. What does this mean for humans, and for past research?

Mice typically live two years and monkeys live 25 years, but the brains of both appear to develop their synapses at the same time. This finding, the result of a recent study led by neuroscientist Bobby Kasthuri of the U.S. Department of Energy’s (DOE) Argonne National Laboratory and his colleagues at the University of Chicago, is a shock for neuroscientists. The study is published in the journal Nature Communications.

Until now, brain development was understood as happening faster in mice than in other, longer-living mammals such as primates and humans. Researchers studying the brain of a 2-month-old mouse, for example, assumed it had already finished developing because the animal had a shorter overall lifespan in which to develop. In contrast, the brain of a 2-month-old primate was still considered to be going through developmental changes. Accordingly, the 2-month-old mouse brain was not considered a good model for comparison with that of a 2-month-old primate.

That assumption appears to be completely wrong, and the authors think the finding will call into question many results that used young mouse brain data as the basis for research into various human conditions, including autism and other neurodevelopmental disorders.

“A fundamental question in neuroscience, especially in mammalian brains, is how do brains grow up?” said Kasthuri. “It turns out that mammalian brains mature at the same rate, at every absolute stage. We are going to have to rethink aging and development now that we find it’s the same clock.”

Gregg Wildenberg, a staff scientist at the University of Chicago, is the lead author of the study; his coauthors include Kasthuri and graduate students Hanyu Li, Vandana Sampathkumar and Anastasia Sorokina. Wildenberg looked closely at the neurons and synapses in the brains of very young mice. He marveled that a baby mouse crawled, ate and behaved just as one would expect despite having almost no measurable connections in its brain circuitry.

“I think I found one synapse along an entire neuron, and that is shocking,” said Wildenberg. “This living baby animal was existing outside of the womb six days after birth, behaving and experiencing the world without any of its brain’s neurons actually connected to each other. We have to be careful about overinterpreting our results, but it’s fascinating.”

Neurons in the brain differ from the cells of every other organ because they are post-mitotic, meaning they never divide. Other cells in the body, such as those of the liver, stomach, heart and skin, divide, get replaced and deteriorate over the course of a lifetime. This process begins during development and ultimately transitions into aging. The brain, however, is the only mammalian organ that has essentially the same cells on the first and the last day of life.

Complicating matters, early embryonic cells of every species appear identical. If fish, mouse, primate and human embryos were all together in a petri dish, it would be virtually impossible to figure out which embryo would develop into which species. At some mysterious point, a developmental programming change happens within an embryo and only one specific species emerges. Scientists would like to understand the role of brain cells in brain development, as well as in the physical development of each species.

Kasthuri and his team were able to advance their recent discovery thanks to the Argonne Leadership Computing Facility (ALCF), a DOE Office of Science user facility. Looking at brain cells at the nanoscale produces enormous datasets (“terabytes, terabytes and more terabytes of data,” said Kasthuri), which the ALCF is able to handle. The researchers used the supercomputing facility to look at every neuron and count every synapse across multiple brain samples at multiple ages in the two species. Collecting and analyzing that level of data would have been impossible without the ALCF, said Kasthuri.
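Once each axon has been traced and its synapses and branch points counted, the density measure plotted in the figure (synapses and branches per micrometre of axon) is simple arithmetic. The Python sketch below shows one way to compute it and average it per species and age. The `AxonTrace` record, its field names and the example values are hypothetical and invented for illustration; they are not the study's data format or analysis pipeline, which the article does not describe.

```python
from collections import defaultdict
from dataclasses import dataclass
from statistics import mean


@dataclass
class AxonTrace:
    """One reconstructed axon (hypothetical record format, for illustration only)."""
    species: str
    age_days: int
    path_length_um: float  # traced axon length in micrometres
    n_synapses: int        # synapses counted along the traced length
    n_branches: int        # branch points counted along the traced length


def densities(axon: AxonTrace) -> tuple[float, float]:
    """Return (synapses per um, branches per um) for a single axon."""
    if axon.path_length_um <= 0:
        raise ValueError("path length must be positive")
    return (axon.n_synapses / axon.path_length_um,
            axon.n_branches / axon.path_length_um)


def mean_density_by_age(axons: list[AxonTrace]) -> dict[tuple[str, int], tuple[float, float]]:
    """Average the per-axon densities within each (species, age) group."""
    groups: dict[tuple[str, int], list[tuple[float, float]]] = defaultdict(list)
    for axon in axons:
        groups[(axon.species, axon.age_days)].append(densities(axon))
    return {
        key: (mean(s for s, _ in vals), mean(b for _, b in vals))
        for key, vals in groups.items()
    }


if __name__ == "__main__":
    # Invented example values; these are not measurements from the study.
    traces = [
        AxonTrace("mouse", 10, 200.0, 1, 0),
        AxonTrace("mouse", 10, 100.0, 1, 1),
        AxonTrace("mouse", 60, 150.0, 30, 3),
    ]
    for (species, age), (syn, br) in sorted(mean_density_by_age(traces).items()):
        print(f"{species} p{age}: {syn:.4f} synapses/um, {br:.4f} branches/um")
```

Averaging per-axon densities (rather than pooling all synapses over all cable length) weights each axon equally; which convention the authors used is not stated in the article.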

Kasthuri knows many scientists will want more data to confirm the findings of the recent study. He himself is reconsidering past research results in the context of the new information.

“One of the previous studies we did was comparing an adult mouse brain to an adult primate brain. We thought primates are smarter than mice so every neuron should have more connections, be more flexible, have more routes and so on,” he explained. “We found that the exact opposite is true. Primate neurons have way fewer connections than mouse neurons. Now, looking back, we thought we were comparing similar species but we were not. We were comparing a 3-month-old mouse to a 5-year-old primate.”

The research’s implications for humans are unclear. For one thing, humans develop more slowly than other species behaviorally. For example, many four-legged mammals can walk within the first hour of life, whereas humans often take more than a year to take their first steps. Are the rules and pace of synaptic development different in human brains compared with other mammalian brains?

“We believe something remarkable, something magical, will be revealed when we are able to look at human tissues,” said Kasthuri, who suspects humans may be on a different schedule altogether. “That’s where the clock that is the same for […]

Unlocking the secrets of the brain’s dopaminergic system

A new organoid model of the dopaminergic system sheds light on its intricate functionality and potential implications for Parkinson’s disease. The model, developed by the group of Jürgen Knoblich at the Institute of Molecular Biotechnology (IMBA) of the Austrian Academy of Sciences, replicates the dopaminergic system’s structure, connectivity, and functionality. The study, published on December 5 in Nature Methods, also uncovers the enduring effects of chronic cocaine exposure on the dopaminergic circuit, even after withdrawal.

A completed run, the early morning hit of caffeine, the smell of cookies in the oven — these rewarding moments are all due to a hit of the neurotransmitter dopamine, released by neurons in a neural network in our brain, called the “dopaminergic reward pathway.” Apart from mediating the feeling of “reward,” dopaminergic neurons also play a crucial role in fine motor control, which is lost in diseases such as Parkinson’s disease. Despite dopamine’s importance, key features of the system are not yet understood, and no cure for Parkinson’s disease exists. In their new study, the group of Jürgen Knoblich at IMBA developed an organoid model of the dopaminergic system, which not only recapitulates the system’s morphology and nerve projections, but also its functionality.

A model of Parkinson’s disease

Tremor and a loss of motor control are characteristic symptoms of Parkinson’s disease and are due to a loss of neurons that release the neurotransmitter dopamine, called dopaminergic neurons. When dopaminergic neurons die, fine motor control is lost and patients develop tremors and uncontrollable movements. Although the loss of dopaminergic neurons is crucial in the development of Parkinson’s disease, the mechanisms by which it happens, and how we can prevent or even repair the damage to the dopaminergic system, are not yet understood.

Animal models have provided some insight into Parkinson’s disease; however, because rodents do not naturally develop the disease, animal studies have proved unsatisfactory in recapitulating its hallmark features. In addition, the human brain contains many more dopaminergic neurons, which also wire up differently, sending projections to the striatum and the cortex. “We sought to develop an in vitro model that recapitulates these human features in so-called brain organoids,” explains Daniel Reumann, previously a PhD student in the lab of Jürgen Knoblich at IMBA, and first author of the paper. “Brain organoids are human stem cell derived three-dimensional structures, which can be used to understand both human brain development, as well as function,” he explains further.

The team first developed organoid models of the so-called ventral midbrain, striatum and cortex — the regions linked by neurons in the dopaminergic system — and then developed a method for fusing these organoids together. As happens in the human brain, the dopaminergic neurons of the midbrain organoid send out projections to the striatum and the cortex organoids. “Somewhat surprisingly, we observed a high level of dopaminergic innervation, as well as synapses forming between dopaminergic neurons and neurons in striatum and cortex,” Reumann recalls.

To assess whether these neurons and synapses are functional, the team collaborated with Cedric Bardy’s group at SAHMRI and Flinders University, Australia, to investigate whether neurons in this system would form functional neural networks. And indeed, when the researchers stimulated the midbrain organoid, which contains the dopaminergic neurons, neurons in the striatum and cortex organoids responded to the stimulation. “We successfully modelled the dopaminergic circuit in vitro, as the cells not only wire correctly, but also function together,” Reumann sums up.

The organoid model of the dopaminergic system could be used to improve cell therapies for Parkinson’s disease. In early clinical studies, researchers have injected precursors of dopaminergic neurons into the striatum to try to make up for the lost natural innervation. However, these studies have had mixed success. In collaboration with the lab of Malin Parmar at Lund University, Sweden, the team demonstrated that dopaminergic progenitor cells injected into the dopaminergic organoid model mature into neurons and extend neuronal projections within the organoid. “Our organoid system could serve as a platform to test conditions for cell therapies, allowing us to observe how precursor cells behave in a three-dimensional human environment,” Jürgen Knoblich, the study’s corresponding author, explains. “This allows researchers to study how progenitors can be differentiated more efficiently and provides a platform which allows to study how to recruit dopaminergic axons to target regions, all in a high-throughput manner.”

Insights into the reward system

Dopaminergic neurons also fire whenever we feel rewarded, thus forming the basis of the “reward pathway” in our brains. But what happens when dopaminergic signaling is perturbed, such as in addiction? To investigate this question, the researchers made use of a well-known dopamine reuptake inhibitor, cocaine. When the organoids were exposed to cocaine chronically, over 80 days, the dopaminergic circuit changed functionally, morphologically and transcriptionally. These changes persisted even when cocaine exposure was stopped 25 days before the end of the experiment, which simulated the withdrawal condition. “Even after almost a month after stopping cocaine exposure, the effects of cocaine on the dopaminergic circuit were still visible, which means that we can now investigate what the long-term effects of dopaminergic overstimulation are in a human-specific in vitro system,” Reumann summarizes.


Story Source:

Materials provided by IMBA - Institute of Molecular Biotechnology of the Austrian Academy of Sciences. Note: Content may be edited for style and length.

What’s your Brain Care Score? The answer may indicate your dementia risk


CNN —

What if you could assess your risk of developing dementia or having a stroke as you age without medical procedures? A new tool named the Brain Care Score, or BCS, may help you do just that while also advising how you can lower your risk, a new study has found.

The 21-point Brain Care Score refers to how a person fares on 12 health-related factors concerning physical, lifestyle and social-emotional components of health, according to the study published December 1 in the journal Frontiers in Neurology. The authors found participants with a higher score had a lower risk of developing dementia or having a stroke later in life.


“Patients and practitioners can start focusing more on improving their BCS today, and the good news is improving on these elements will also provide overall health benefits,” said the study’s senior author Dr. Jonathan Rosand, cofounder of the McCance Center for Brain Health at Massachusetts General Hospital, in a news release.

“The components of the BCS include recommendations found in the American Heart Association’s Life’s Essential (8) for cardiovascular health, as well (as) many modifiable risk factors for common cancers,” added Rosand, also the J.P. Kistler Endowed Chair in Neurology at Massachusetts General Hospital and a professor of neurology at Harvard Medical School. “What’s good for the brain is good for the heart and the rest of the body.”

The physical components included blood pressure, cholesterol, hemoglobin A1c and body mass index, while lifestyle factors included nutrition, alcohol consumption, aerobic activities, sleep and smoking. Social-emotional aspects referred to relationships, stress management and meaning in life.

The authors cited “the global brain health crisis” as one of the motivators for their work; in the United States alone, 1 in 7 people have dementia, and every four minutes someone dies from a stroke, according to the study. Prevention efforts can help substantially reduce deaths, but the American Heart Association’s Life’s Essential 8, the authors said, was developed without input from patients.


“In order to engage patients, we sought to develop a tool that responded to the question we received most frequently from our patients and their family members: ‘How can I take good care of my brain?’” the authors said.

Brain care and disease risk

The researchers sought to validate their tool by looking into the associations between nearly 400,000 participants’ Brain Care Score at the beginning of the UK Biobank study between 2006 and 2010, and whether they had dementia or stroke around 12 years later. The UK Biobank study has followed the health outcomes of more than half a million people between ages 40 and 69 in the United Kingdom for at least 10 years.

Among adults who were younger than 50 upon enrollment, every five-point positive difference in their score was associated with a 59% lower risk of developing dementia and a 48% lower risk of having a stroke later in life, the authors found. Those in their 50s had a 32% lower risk of dementia and a 52% lower chance of stroke. Participants older than 59 had the lowest estimates, with an 8% lower risk of dementia and a 33% lower risk of stroke.
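Each of these figures is quoted per five-point difference in score. A short Python sketch can show how such a figure scales to other differences, but only under a stated assumption: that each percentage can be read as a multiplicative ratio (59% lower means a ratio of 0.41) and that the log of that ratio changes linearly with the score difference, as it would in a proportional-hazards model with a linear score term. The article does not say how the estimates were modelled, so the per-point and extrapolated values below are illustrative arithmetic, not results from the study.

```python
def ratio_for_difference(reduction_per_5: float, points: float) -> float:
    """Scale an 'X% lower risk per 5 points' figure to another score difference.

    ASSUMPTION (not stated in the article): the ratio is multiplicative and
    log-linear in the score, so ratio(points) = ratio(5) ** (points / 5).
    """
    if not 0 <= reduction_per_5 < 1:
        raise ValueError("reduction must be a fraction in [0, 1)")
    ratio_per_5 = 1 - reduction_per_5
    return ratio_per_5 ** (points / 5)


# Reductions per 5-point difference, as reported in the article.
REPORTED = {
    ("under 50", "dementia"): 0.59,
    ("under 50", "stroke"): 0.48,
    ("50s", "dementia"): 0.32,
    ("50s", "stroke"): 0.52,
    ("over 59", "dementia"): 0.08,
    ("over 59", "stroke"): 0.33,
}

if __name__ == "__main__":
    for (group, outcome), reduction in REPORTED.items():
        per_point = ratio_for_difference(reduction, 1)
        print(f"{group:>8} {outcome:<8} ratio per 5 pts: {1 - reduction:.2f}, "
              f"implied per point: {per_point:.3f}")
```

Under that assumption, the under-50 dementia figure (a ratio of 0.41 per five points) works out to roughly 0.84 per point, and compounding it over a 10-point difference gives about 0.17. Whether the association actually holds across such ranges is not something the article reports.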


“It’s rare for me to say this, but everyone age 40 and above who has a family member affected by Alzheimer’s or dementia should talk to their doctor about this score,” said Dr. Richard Isaacson, director of research at the Institute for Neurodegenerative Diseases in Florida, via email. Isaacson wasn’t involved in the study.

“Most people are unaware that at least 40% of dementia cases may be preventable if that person does everything right,” Isaacson added. “The Brain Care Score helps people at risk with a roadmap forward based on 12 modifiable factors before the onset of cognitive decline.”

Caring for your brain

The authors suggested that the smaller benefits seen in older adults could be because dementia tends to progress more slowly in this age group, meaning practitioners may not pick up on a patient’s early dementia until it gets worse later.

But in terms of explaining the overall findings, many past studies have affirmed the benefits of these health components for brain health.

Swapping processed foods for more natural choices has been associated with a 34% lower risk of dementia, while frequent exercise and daily visits with loved ones reduced risk by 35% and 15%, respectively, according to two studies published in 2022.

And “while most of these factors can be evaluated by people at home, it’s also important for people at their next primary care doctor’s visit to ‘know their numbers’ in the areas of blood pressure, cholesterol and blood sugar control,” which are other factors in the Brain Care Score, Isaacson said. “Better control of these vascular risk factors has the power to slam the brakes on the road to cognitive decline (and) dementia, as well as stroke.”


He added, “When you combine this with better dietary choices, less alcohol, having a sense of purpose in life and staying socially engaged, the dividends add up greatly over time.”

Since participants’ scores were measured only once, at the start of the study, more research is needed to determine whether someone can reduce their stroke or dementia risk by improving their Brain Care Score over time, a hypothesis the authors said they are currently studying.

“We have every reason to believe that improving your BCS over time will substantially reduce your risk of ever having a stroke or developing dementia in the future,” Rosand said. “But as scientists, we always want to see proof.”

Participating in studies like this one can be a good way […]

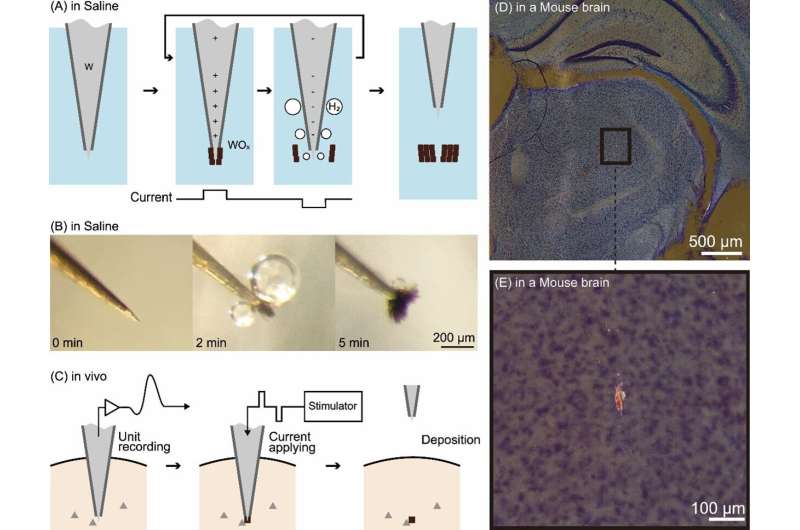

Developing technology to place minute ‘marks’ in the brain

(A) Principle of the technology. (B) Electrolysis of a tungsten electrode in physiological saline; the black lumps are oxides. (C) Procedure for applying this technology in vivo. (D) Dark-field observation image of a slice of a mouse brain in which this technology was applied in deep brain regions. (E) Enlarged view of the black-bounded area in (D); the glowing red mass is an oxide mark. Credit: Toyohashi University of Technology

Researchers have developed a technology capable of deploying very small “marks” in regions of the brain where activity has been recorded.

These marks are created by passing a microcurrent through the tungsten electrodes that are inserted into the brain to record brain activity. The marks do not damage brain tissue and can remain safely inside the brain of a living organism. This technology enables high-resolution visualization of the brain-wide distribution of neurons with particular functional characteristics. The results of this research were published in eNeuro.

Studies that record the activity of deep brain regions, such as the hippocampus and thalamus, commonly use needle-like microelectrodes that penetrate the brain to record the electrical activity of neurons. These electrodes offer higher spatiotemporal resolution than fMRI or EEG recordings and can record signals from tissue in deep brain regions.

However, one disadvantage of these electrodes is that, once an electrode has been removed, it becomes quite difficult to determine exactly where in the brain its tip was located. To address this shortcoming, a method called “marking” has been widely used. However, existing marking methods have limited spatial resolution and damage brain tissue. As a result, the best spatial precision achievable with conventional experimental methods remains approximately 0.1 mm.

Tatsuya Oikawa, Toshimitsu Hara, Kento Nomura, along with Associate Professor Kowa Koida of the Department of Computer Science and Engineering, Toyohashi University of Technology, contributed to the study. Oikawa and his colleagues focused on the electrolysis of tungsten, a metal commonly used to make the aforementioned needle-like electrodes. They developed a method wherein electrolysis was employed to deposit oxidized tungsten within the tissues of the deep brain region.

When electrolyzed, the tungsten electrode generates metal oxide and hydrogen bubbles at positive and negative currents, respectively. By rapidly alternating between positive and negative currents, metal oxide is repeatedly generated at the tip of the tungsten electrode, followed by detachment by hydrogen bubbles; this results in the formation of a small lump of oxide. This lump of oxide serves as a mark indicating the precise location of the electrode tip.
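The alternating-current step described above can be pictured as a square wave whose sign flips every phase: per the article, positive phases grow oxide at the electrode tip and negative phases produce hydrogen bubbles that detach it. The Python sketch below only builds such a schedule. The amplitude, phase duration and cycle count are hypothetical placeholders, since the article does not report the parameters the researchers used, and the symmetric, charge-balanced shape is likewise an assumption made for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Phase:
    start_ms: float
    duration_ms: float
    current_ua: float  # sign convention from the article: + grows oxide, - makes H2 bubbles

    @property
    def effect(self) -> str:
        return "oxide forms at tip" if self.current_ua > 0 else "hydrogen bubbles detach oxide"


def alternating_schedule(amplitude_ua: float, phase_ms: float, n_cycles: int) -> list[Phase]:
    """Build a symmetric square wave alternating positive and negative current.

    All argument values are hypothetical; the study's actual settings are not
    given in the article.
    """
    if amplitude_ua <= 0 or phase_ms <= 0 or n_cycles < 1:
        raise ValueError("amplitude, phase duration and cycle count must be positive")
    phases: list[Phase] = []
    t = 0.0
    for _ in range(n_cycles):
        for sign in (1, -1):  # positive phase first, then negative
            phases.append(Phase(t, phase_ms, sign * amplitude_ua))
            t += phase_ms
    return phases


def net_charge_nc(phases: list[Phase]) -> float:
    """Net charge in nanocoulombs (uA x ms = nC); zero for a symmetric wave."""
    return sum(p.current_ua * p.duration_ms for p in phases)


if __name__ == "__main__":
    for phase in alternating_schedule(amplitude_ua=10.0, phase_ms=1.0, n_cycles=2):
        print(f"t={phase.start_ms:4.1f} ms  I={phase.current_ua:+6.1f} uA  {phase.effect}")
```

Each positive/negative pair corresponds to one grow-then-detach cycle in the article's description; repeating the pair is what builds up the small lump of oxide that serves as the mark.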

Oikawa and his colleagues used the brains of mice and monkeys as models to generate minute oxide lumps (as small as 20 µm in diameter) at the electrode tip and confirmed that they could be deposited within the brain. They also confirmed that the current used to generate these marks and the deposited oxides do not damage brain tissue.

Furthermore, they made the noteworthy discovery that the deposited oxides appear to glow red when stained using a standard brain tissue stain (Nissl staining) and observed under a microscope using dark-field illumination. This distinctive characteristic clearly differentiates the marks from surrounding noise, facilitating the easy identification of small markings through low-power microscopy.

Development background

Associate Professor Kowa Koida, the advisor to Oikawa and his colleagues, said, “Originally, our plan was to remove plated coatings applied in advance to the electrodes and utilize them as marks. Unfortunately, a mix-up occurred during experimentation, leading us to unintentionally employ an incompletely plated electrode. Surprisingly, despite this setback, we observed the formation of a mark. Upon closer consideration, we recognized that electrolysis, which is commonly used to form tungsten rods into thin needles for electrodes, produces an oxide powder.”

“That powder can become a mark. We also realized that electrolysis can be performed in vivo. Furthermore, we found that the current parameters necessary for electrolysis can align with those employed when activating neurons through current delivered via the electrode. Essentially, the safety of the organism marked using our method has already been validated in previous experiments involving current stimulation. While it has been widely known that current stimulation results in the wear and tear of electrode tips, what remained unnoticed was that simultaneously, metal oxides are deposited in the body, serving as marks.”

Future outlook

Now, Oikawa and his colleagues are applying this technology to unravel the microscopic functional structure of the lateral geniculate nucleus, a visual center deep in the brain of macaque monkeys. By providing examples of effective applications of this technology, they aim to foster its widespread adoption as a fundamental technique in neuroscience.

Furthermore, this processing technique, utilizing in vivo metal electrolysis for deposition, has been used in the medical field, such as the treatment of aneurysms using embolization coils. Therefore, electrolysis in vivo has already garnered a certain level of trust. By combining both the measurement and deposition processes into a unified technique using microelectrodes, the technologies used in this study may be applied to medical care as well.

Provided by Toyohashi University of Technology

A small study offers hope for people with traumatic brain injuries

ARI SHAPIRO, HOST:

A severe traumatic brain injury often causes lasting damage.

GINA ARATA: I couldn’t get a job because if I was, like, say, a waitress and you put in an order, I would forget. I couldn’t remember to get you, like, a Diet Pepsi.

SHAPIRO: Now a small study offers hope. NPR’s Jon Hamilton reports on how precisely targeted deep brain stimulation appears to help injured brains work better.

JON HAMILTON, BYLINE: Gina Arata’s brain was injured when she was 22 and driving to a wedding shower.

ARATA: I was, like, maybe a minute from my house. And I just had hydroplaned over a water puddle, and I swerved towards trees.

HAMILTON: Arata spent 14 days in a coma. Then came the hard part.

ARATA: I had to learn everything again – how to button my pants, how to shave my legs, how to feed myself – because right after the accident, I couldn’t use my left side of my body.

HAMILTON: Arata’s physical abilities improved, but her focus and memory remained unreliable. And she had no filter, which was awkward when she went on dates. Fifteen years passed with little change. During that time, Dr. Nicholas Schiff at Weill Cornell Medicine in New York was studying people with moderate to severe traumatic brain injuries. He noticed a problem with connections to parts of the frontal cortex.

NICHOLAS SCHIFF: Although the people were able to wake up, have a day, live on their own, they couldn’t go back to school and work because some of the resources in those same parts of the frontal cortex were unavailable to them.

HAMILTON: Which meant they had limited use of brain areas involved in planning, focus and self-control. In 2007, Schiff had been part of a team that used deep brain stimulation to help a patient in a minimally conscious state become more aware and responsive. Nearly a decade later, Schiff wanted to see if a similar approach could help people like Gina Arata.

SCHIFF: What we didn’t know, of course, was, would this work in people who had moderate to severe brain injury? And if it did basically work the way we expected it to work physiologically, would it make a difference?

HAMILTON: To find out, Schiff collaborated with Dr. Jaimie Henderson, a neurosurgeon at Stanford. Henderson’s job was to implant tiny electrodes deep in the brain.

JAIMIE HENDERSON: There is this very small, very difficult-to-target region right in the middle of a relay station in the brain called the thalamus.

HAMILTON: The region plays a critical role in determining our level of consciousness. The team hoped that stimulating it would help people whose brains hadn’t fully recovered from a traumatic injury. So starting in 2018, Henderson operated on five patients, including Arata.

HENDERSON: Once we put the wires in, we then took those wires up to a pacemaker-like device that’s implanted in the chest, and then that device can be programmed externally.

HAMILTON: Henderson says overall, patients were able to complete a cognitive task more than 30% faster.

HENDERSON: Everybody got better, and some people got dramatically better, better than we would really ever expect with any other kind of intervention.

HAMILTON: Gina Arata realized how much difference her implanted stimulator made during a testing session with Dr. Schiff.

ARATA: He asked me to name things in the produce aisle, and I could just rattle them off like, ooh, lettuce, eggplant, apples, bananas. I was just flying through.

HAMILTON: Then Schiff changed the setting and asked her about another grocery store aisle. She couldn’t name a single item. The results, which appear in the journal Nature Medicine, are promising, says Deborah Little, a psychologist at UTHealth in Houston.

DEBORAH LITTLE: What this study shows is that they potentially can make a difference years out from injury.

HAMILTON: Little, the research director at the Trauma and Resilience Center at UTHealth, says it’s still not clear whether the approach is feasible for large numbers of patients. But she says if it is, stimulation might help some of the people who have run out of rehabilitation options.

LITTLE: We don’t have a lot of tools to offer them. I get calls from patients from previous studies, even, who will call me and ask if there’s anything new they can try.

HAMILTON: Little says some patients’ lives could be changed if they experience the sort of improvement found in the study.

LITTLE: Even a 10% change in function can make the difference between being able to return to your job or not.

HAMILTON: Gina Arata, who is 45 now, hasn’t landed a job yet, but she says her implanted stimulator is allowing her to do other things like read books and maintain relationships.

ARATA: It’s on right now. I’ve had my battery changed and upgraded. I have a telephone to turn it on and off or check the battery lifespan. It’s awesome.

HAMILTON: The researchers are already planning a larger trial of the approach. Jon Hamilton, NPR News.

(SOUNDBITE OF JEAN CARNE SONG, “VISIONS”)

Transcript provided by NPR, Copyright NPR. NPR transcripts are created on a rush deadline by an NPR contractor. This text may not be in its final form and may be updated or revised in the future. Accuracy and availability may vary. The authoritative record of NPR’s programming is the audio record.

Experimental deep brain stimulation offers hope to treat cognitive deficits after traumatic brain injuries

Five people who had life-altering, seemingly irreversible cognitive deficits following moderate to severe traumatic brain injuries showed substantial improvements in their cognition and quality of life after receiving an experimental form of deep brain stimulation (DBS) in a phase 1 clinical trial. The trial, reported Dec. 4 in Nature Medicine, was led by investigators at Weill Cornell Medicine, Stanford University, the Cleveland Clinic, Harvard Medical School and the University of Utah.

The findings pave the way for larger clinical trials of the DBS technique and offer hope that cognitive deficits associated with disability following traumatic brain injury (TBI) may be treatable, even many years after the injury.

The stimulation, administered for 12 hours a day, targeted a brain region called the thalamus. After three months of treatment, all the participants scored higher on a standard test of executive function that involves mental control, with the improvements ranging from 15 to 55 percent.

The participants also markedly improved on secondary measures of attention and other executive functions. Several of the participants and their family members reported substantial quality of life gains, including improvements in the ability to work and to participate in social activities, according to a report describing participant and family perspectives from the trial. Dr. Joseph Fins, the E. William Davis, Jr., MD Professor of Medical Ethics at Weill Cornell Medicine, led that research effort.

“The ability to keep your focus and ignore the other things that aren’t important to focus on is very, very important to a lot of things in life,” one participant said in the report. “You never know what a blessing it is until you get it the second time.”

“These participants had experienced brain injury years to decades before, and it was thought that whatever recovery process was possible had already played out, so we were surprised and pleased to see how much they improved,” said study co-senior author Dr. Nicholas Schiff, the Jerold B. Katz Professor of Neurology and Neuroscience in the Feil Family Brain and Mind Research Institute at Weill Cornell Medicine.

“Our aim now is to expand this trial, to confirm the effectiveness of our DBS technique, and to see how broadly it can be applied to TBI patients with chronic cognitive deficits,” said Dr. Jaimie Henderson, study co-senior author and the John and Jene Blume – Robert and Ruth Halperin Professor in the Department of Neurosurgery at Stanford University School of Medicine.

A cognitive pacemaker?

Recent estimates by the Centers for Disease Control & Prevention suggest that in the United States alone, TBI accounts for more than 200,000 hospitalizations and more than 60,000 deaths annually, with roughly five million Americans suffering from long-term, TBI-related cognitive disability.

This chronic disability typically involves memory, attention and other cognitive deficits along with related personality changes, which together impair the individuals’ social relationships, ability to work and overall ability to function independently. Traditionally, researchers have assumed that these problems stem from irreversible brain-cell loss and are thus untreatable.

However, Dr. Schiff’s work has shown that activity in a specific brain circuit, which the investigators termed the ‘mesocircuit,’ underlies deficits after TBI in attention, planning and other abilities known as executive cognitive functions, and that such functions may be at least partially recoverable. A brain region called the central thalamus normally serves as a kind of energy regulator for this cognitive circuit. Though this region is generally damaged by a TBI, stimulating it via DBS may restore its activity and thus reactivate the cognitive circuits it serves.

“Basically, our idea has been to overdrive this part of the thalamus to restore brain function, much as a cardiac pacemaker works to restore heart function,” Dr. Schiff said.

In a 2007 study, Dr. Schiff and colleagues showed that DBS targeting the central thalamus markedly improved measures of cognition and behavior in a person with severe TBI who had been in a minimally conscious state for six years. That success, and related preclinical research, led to the new study.

“Every day is a battle”

The participants in the new study, four men and one woman, had regained independence in daily function, but cognitive deficits involving executive functions, stemming from TBIs three to 18 years prior, prevented return to pre-injury levels of work, academic study and social activities.

“Every day is a battle, although I try and be upbeat,” one male participant told the researchers in an interview conducted prior to the treatment. “[I]t’s not the quality of life I really want to live. And so, I’m willing to take the chance, to even get—if I can even get five or 10 percent better . . .”

“Despite decades of costly research, we have barely moved the needle in preventing or reducing TBI-related disability. Our results, although preliminary, suggest that DBS may improve cognitive function well into the chronic phase of recovery,” said Dr. Joseph Giacino, a neuropsychologist who helped design the trial, and served as co-first author of the 2007 study. Dr. Giacino is the director of rehabilitation neuropsychology at Spaulding Rehabilitation Hospital, a staff neuropsychologist at Massachusetts General Hospital and a professor in the Department of Physical Medicine and Rehabilitation at Harvard Medical School.

The surgery

Dr. Henderson and his surgical team implanted each subject with DBS electrodes positioned to stimulate a part of the thalamus called the central lateral (CL) thalamic nucleus, along with an associated bundle of nerve fibers.

The implantation was guided by brain imaging (MRI and CT) of each subject. One type of MRI was processed with a novel algorithm developed by Dr. Brian Rutt’s group at Stanford to provide high-resolution outlines of CL and other relevant thalamic nuclei, which were then combined with 3D maps of nerve fiber tracts passing through these nuclei. Computer models of how DBS electrodes would interact with CL neurons and nerve fibers were developed by biomedical engineer Dr. Christopher Butson and colleagues, who were at the University of Utah during the study.

“Participant-specific models were used to create pre-operative surgical plans and guide post-surgical DBS programming to maximize activation of CL brain circuits while minimizing activation of surrounding thalamic circuits,” […]

In a first, human study shows how dopamine teaches our brain new tricks

More than just the ‘rewards’ chemical, dopamine helps our brains avoid negative experiences based on previous ‘punishment’.

For the first time, dopamine regulation has been mapped in real time, deep inside the brains of three humans, revealing how the neurotransmitter plays an essential role not just in recognizing rewards but also in learning from mistakes.

Researchers from Wake Forest University School of Medicine (WFUSM) have unlocked crucial information about the brain’s decision-making mechanisms in a new study that could help us better understand how dopamine signaling differs in psychiatric and neurological disorders.


“Previously, research has shown that dopamine plays an important role in how animals learn from ‘rewarding’ (and possibly ‘punishing’) experiences,” said Dr Kenneth T. Kishida, associate professor of physiology and pharmacology and neurosurgery at WFUSM. “But little work has been done to directly assess what dopamine does on fast timescales in the human brain.

“This is the first study in humans to examine how dopamine encodes rewards and punishments and whether dopamine reflects an ‘optimal’ teaching signal that is used in today’s most advanced artificial intelligence research.”

For the study, the researchers used fast-scan cyclic voltammetry paired with machine learning to measure dopamine levels in real time. Because this measurement can only be made during invasive brain surgery, the participants were three patients who were already scheduled to receive deep brain stimulation for essential tremor.

A carbon fiber microelectrode was inserted deep into the brains of the participants to monitor dopamine in the striatum, the area of the brain involved in decision making, habit formation and reward.

They were then tasked with playing a simple computer game that had three stages requiring the participants to learn through experience to make choices that maximized rewards while minimizing punishments. The players were rewarded with real monetary prizes for making the correct decisions and lost money as a penalty for wrong moves. Dopamine was measured once every 100 milliseconds in each player, across all stages of the game.

What they found was unexpected: the dopamine pathway may be far more multifaceted and complex than previously thought, playing as much of a role in processing losses as it does in processing wins. And the signals for wins and losses appear to operate on slightly different timescales.

“We found that dopamine not only plays a role in signaling both positive and negative experiences in the brain, but it seems to do so in a way that is optimal when trying to learn from those outcomes,” said Kishida. “What was also interesting, is that it seems like there may be independent pathways in the brain that separately engage the dopamine system for rewarding versus punishing experiences. Our results reveal a surprising result that these two pathways may encode rewarding and punishing experiences on slightly shifted timescales separated by only 200 to 400 milliseconds in time.”

The study indicates that dopamine is a key factor in how we learn from our experiences – good and bad – helping our brain adapt behaviors to make choices tied to positive outcomes.

“Traditionally, dopamine is often referred to as ‘the pleasure neurotransmitter,’” Kishida said. “However, our work provides evidence that this is not the way to think about dopamine. Instead, dopamine is a crucial part of a sophisticated system that teaches our brain and guides our behavior. That dopamine is also involved in teaching our brain about punishing experiences is an important discovery and may provide new directions in research to help us better understand the mechanisms underlying depression, addiction, and related psychiatric and neurological disorders.”

The study was published in the journal Science Advances.

Source: Atrium Health Wake Forest Baptist

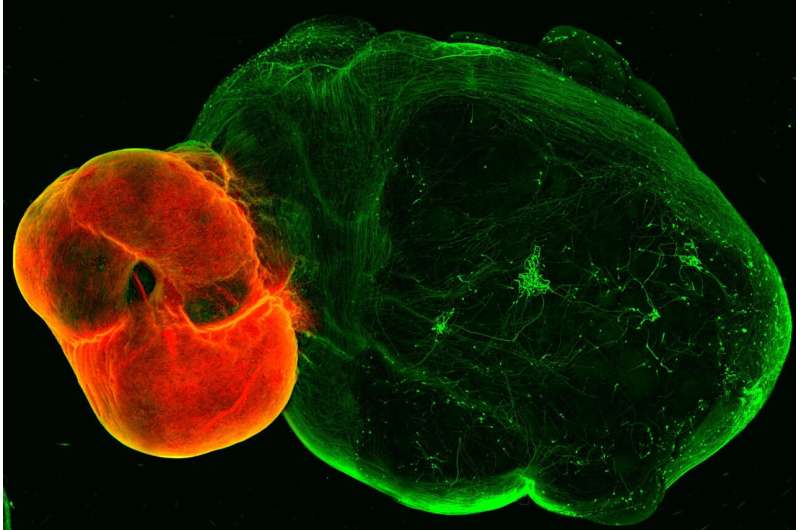

Unlocking the secrets of the brain’s dopaminergic system

by IMBA - Institute of Molecular Biotechnology of the Austrian Academy of Sciences

Dopaminergic neurons in the ventral midbrain (red) and ventral midbrain projections into striatal and cortical tissue (green). Credit: (c) Daniel Reumann/IMBA

A new organoid model of the dopaminergic system sheds light on its intricate functionality and potential implications for Parkinson’s disease. The model, developed by the group of Jürgen Knoblich at the Institute of Molecular Biotechnology (IMBA) of the Austrian Academy of Sciences, replicates the dopaminergic system’s structure, connectivity, and functionality.

The study, published in Nature Methods, also uncovers the enduring effects of chronic cocaine exposure on the dopaminergic circuit, even after withdrawal.

A completed run, the early morning hit of caffeine, the smell of cookies in the oven—these rewarding moments are all due to a hit of the neurotransmitter dopamine, released by neurons in a neural network in our brain, called the “dopaminergic reward pathway.”

Apart from mediating the feeling of “reward,” dopaminergic neurons also play a crucial role in fine motor control, which is lost in diseases such as Parkinson’s disease. Despite dopamine’s importance, key features of the system are not yet understood, and no cure for Parkinson’s disease exists. In their new study, the group of Jürgen Knoblich at IMBA developed an organoid model of the dopaminergic system, which recapitulates not only the system’s morphology and nerve projections but also its functionality.

A model of Parkinson’s disease

Tremor and a loss of motor control are characteristic symptoms of Parkinson’s disease and are due to a loss of neurons that release the neurotransmitter dopamine, called dopaminergic neurons. When dopaminergic neurons die, fine motor control is lost, and patients develop tremors and uncontrollable movements. Although the loss of dopaminergic neurons is crucial in the development of Parkinson’s disease, the mechanisms of how this happens and how we can prevent—or even repair—the dopaminergic system are not yet understood.

Animal models have provided some insight into Parkinson’s disease; however, because rodents do not naturally develop the disease, animal studies have proved unsatisfactory in recapitulating its hallmark features. In addition, the human brain contains many more dopaminergic neurons, which also wire up differently within the human brain, sending projections to the striatum and the cortex.

“We sought to develop an in vitro model that recapitulates these human features in so-called brain organoids,” explains Daniel Reumann, previously a Ph.D. student in the lab of Jürgen Knoblich at IMBA and first author of the paper. “Brain organoids are human stem cell-derived three-dimensional structures, which can be used to understand both human brain development and function,” he explains further.

The team first developed organoid models of the so-called ventral midbrain, striatum, and cortex—the regions linked by neurons in the dopaminergic system—and then developed a method for fusing these organoids together. As happens in the human brain, the midbrain organoid’s dopaminergic neurons send projections to the striatum and the cortex organoids.

“Somewhat surprisingly, we observed a high level of dopaminergic innervation, as well as synapses forming between dopaminergic neurons and neurons in striatum and cortex,” Reumann recalls.

To assess whether these neurons and synapses are functional, the team collaborated with Cedric Bardy’s group at SAHMRI and Flinders University, Australia, to investigate if neurons in this system would start to form functional neural networks. Indeed, when the researchers stimulated the midbrain, which contains dopaminergic neurons, neurons in the striatum and cortex responded to the stimulation. “We successfully modeled the dopaminergic circuit in vitro, as the cells not only wire correctly, but also function together,” Reumann says.

The organoid model of the dopaminergic system could be used to improve cell therapies for Parkinson’s disease. In first clinical studies, researchers have injected precursors of dopaminergic neurons into the striatum, to try and make up for the lost natural innervation.

However, these studies have had mixed success. In collaboration with the lab of Malin Parmar at Lund University, Sweden, the team demonstrated that dopaminergic progenitor cells injected into the dopaminergic organoid model mature into neurons and extend neuronal projections within the organoid.

“Our organoid system could serve as a platform to test conditions for cell therapies, allowing us to observe how precursor cells behave in a three-dimensional human environment,” Jürgen Knoblich, the study’s corresponding author, explains. “This allows researchers to study how progenitors can be differentiated more efficiently and provides a platform which allows [us] to study how to recruit dopaminergic axons to target regions, all in a high-throughput manner.”

Insights into the reward system

Dopaminergic neurons also fire whenever we feel rewarded, thus forming the basis of the “reward pathway” in our brains. But what happens when dopaminergic signaling is perturbed, such as in addiction? To investigate this question, the researchers made use of a well-known dopamine reuptake inhibitor, cocaine. When the organoids were exposed to cocaine chronically over 80 days, the dopaminergic circuit changed functionally, morphologically, and transcriptionally.

These changes persisted, even when cocaine exposure was stopped 25 days before the end of the experiment, which simulated the withdrawal condition. “Even after almost a month after stopping cocaine exposure, the effects of cocaine on the dopaminergic circuit were still visible, which means that we can now investigate what the long-term effects of dopaminergic overstimulation are in a human-specific in vitro system,” Reumann says.

Provided by IMBA - Institute of Molecular Biotechnology of the Austrian Academy of Sciences

Brain’s Reward Pathway Unlocked: Revealing the Secrets of the Dopaminergic System

Scientists developed a revolutionary organoid model of the dopaminergic system, providing significant insights into Parkinson’s disease and the long-lasting effects of cocaine on the brain. This model is a promising tool for advancing Parkinson’s disease treatments and understanding the enduring impact of drug addiction.

Breakthrough organoid model replicates essential neural network

A new organoid model of the dopaminergic system sheds light on its intricate functionality and potential implications for Parkinson’s disease. The model, developed by the group of Jürgen Knoblich at the Institute of Molecular Biotechnology (IMBA) of the Austrian Academy of Sciences, replicates the dopaminergic system’s structure, connectivity, and functionality. The study, published on December 5 in Nature Methods, also uncovers the enduring effects of chronic cocaine exposure on the dopaminergic circuit, even after withdrawal.

Dopamine’s Role in Reward and Motor Control

A completed run, the early morning hit of caffeine, the smell of cookies in the oven — these rewarding moments are all due to a hit of the neurotransmitter dopamine, released by neurons in a neural network in our brain, called the “dopaminergic reward pathway.”

Apart from mediating the feeling of “reward,” dopaminergic neurons also play a crucial role in fine motor control, which is lost in diseases such as Parkinson’s disease. Despite dopamine’s importance, key features of the system are not yet understood, and no cure for Parkinson’s disease exists. In their new study, the group of Jürgen Knoblich at IMBA developed an organoid model of the dopaminergic system, which not only recapitulates the system’s morphology and nerve projections, but also its functionality.

Dopaminergic neurons in the ventral midbrain (red) and ventral midbrain projections into striatal and cortical tissue (green). Credit: (c) Daniel Reumann/IMBA

Understanding Parkinson’s Disease Through the Model

Tremor and a loss of motor control are characteristic symptoms of Parkinson’s disease and are due to a loss of neurons that release the neurotransmitter dopamine, called dopaminergic neurons. When dopaminergic neurons die, fine motor control is lost and patients develop tremors and uncontrollable movements. Although the loss of dopaminergic neurons is crucial in the development of Parkinson’s disease, the mechanisms of how this happens, and how we can prevent damage to the dopaminergic system or even repair it, are not yet understood.

Animal models have provided some insight into Parkinson’s disease; however, because rodents do not naturally develop the disease, animal studies have proved unsatisfactory in recapitulating its hallmark features. In addition, the human brain contains many more dopaminergic neurons, which also wire up differently within the human brain, sending projections to the striatum and the cortex.

“We sought to develop an in vitro model that recapitulates these human features in so-called brain organoids,” explains Daniel Reumann, previously a PhD student in the lab of Jürgen Knoblich at IMBA, and first author of the paper. “Brain organoids are human stem cell-derived three-dimensional structures, which can be used to understand both human brain development, as well as function,” he explains further.

Developing and Testing the Organoid Model

The team first developed organoid models of the so-called ventral midbrain, striatum, and cortex – the regions linked by neurons in the dopaminergic system – and then developed a method for fusing these organoids together. As happens in the human brain, the dopaminergic neurons of the midbrain organoid send out projections to the striatum and the cortex organoids. “Somewhat surprisingly, we observed a high level of dopaminergic innervation, as well as synapses forming between dopaminergic neurons and neurons in striatum and cortex,” Reumann recalls.

To assess whether these neurons and synapses are functional, the team collaborated with Cedric Bardy’s group at SAHMRI and Flinders University, Australia, to investigate if neurons in this system would start to form functional neural networks. Indeed, when the researchers stimulated the midbrain, which contains dopaminergic neurons, neurons in the striatum and cortex responded to the stimulation. “We successfully modelled the dopaminergic circuit in vitro, as the cells not only wire correctly, but also function together,” Reumann sums up.

Potential Applications in Parkinson’s Disease Therapy

The organoid model of the dopaminergic system could be used to improve cell therapies for Parkinson’s disease. In first clinical studies, researchers have injected precursors of dopaminergic neurons into the striatum, to try and make up for the lost natural innervation. However, these studies have had mixed success. In collaboration with the lab of Malin Parmar at Lund University, Sweden, the team demonstrated that dopaminergic progenitor cells injected into the dopaminergic organoid model mature into neurons and extend neuronal projections within the organoid.

“Our organoid system could serve as a platform to test conditions for cell therapies, allowing us to observe how precursor cells behave in a three-dimensional human environment,” Jürgen Knoblich, the study’s corresponding author, explains. “This allows researchers to study how progenitors can be differentiated more efficiently and provides a platform which allows [us] to study how to recruit dopaminergic axons to target regions, all in a high-throughput manner.”

Insights Into the Reward System

Dopaminergic neurons also fire whenever we feel rewarded, thus forming the basis of the “reward pathway” in our brains. But what happens when dopaminergic signaling is perturbed, such as in addiction? To investigate this question, the researchers made use of a well-known dopamine reuptake inhibitor, cocaine. When the organoids were exposed to cocaine chronically, over 80 days, the dopaminergic circuit changed functionally, morphologically and transcriptionally. These changes persisted, even when cocaine exposure was stopped 25 days before the end of the experiment, which simulated the withdrawal condition.

“Even after almost a month after stopping cocaine exposure, the effects of cocaine on the dopaminergic circuit were still visible, which means that we can now investigate what the long-term effects of dopaminergic overstimulation are in a human-specific in vitro system,” Reumann says.

Reference: “In vitro modeling of the human dopaminergic system using spatially arranged ventral midbrain–striatum–cortex assembloids” 5 December 2023, Nature Methods.

DOI: 10.1038/s41592-023-02080-x

Funding: Austrian Academy of Sciences, Austrian Federal Ministry of Education, Science and Research, City of Vienna, H2020 European Research Council, Austrian Science Fund, Austrian Lotteries, New York Stem Cell Foundation, H2020 European Research Council, Swedish Research […]

Exercise Boosts Brain Power—Neuroscientists Don’t Know How

Key points

Moderate-intensity aerobic exercise (cardio) increases prefrontal cortex oxygenation and boosts brain power.

Even with an insufficient oxygen supply or acute hypoxia, doing cardio can improve cognitive performance.

Increased cerebral blood flow alone may not explain why aerobic exercise improves executive function.

My neuroscientist father was always quick to admit that despite decades of brain research, he still didn’t really know how certain lifestyle choices and daily habits improved or diminished cognitive performance.

As a collegiate athlete, Dad learned anecdotally that exercise and a good night’s sleep helped him think better and facilitated peak performance on exams. But later, after graduating medical school, when he performed animal experiments designed to pinpoint how things like exercise and sleep influenced specific brain functions, his research often raised more new questions than it answered.

New human research (Williams et al., 2023) on how moderate-intensity aerobic exercise and sleep deprivation affect cognitive performance answers a few questions but also casts doubt on a widely held belief that increased oxygen levels in the prefrontal cortex during cardio are the prime driving force of exercise-induced improvements in executive function. This in-press Physiology & Behavior paper was made available online on November 17.

20 Minutes of Cardio Is Enough to Boost Cognitive Performance

This two-pronged study had participants perform cognitive tests that assessed various executive functions under different conditions, including not getting enough sleep and doing cardio in a hypoxic chamber with very little oxygen that mimicked high-altitude conditions.

As expected, exercise improved cognition in almost every situation. Interestingly, small doses (20 mins at moderate intensity) of aerobic exercise could compensate for lower scores on cognitive tasks due to lack of sleep.

Although increased oxygen in the prefrontal cortex was associated with better cognitive performance for most, even when people were experiencing acute hypoxia and their brain was starved of oxygen while doing moderate-intensity aerobic activity, many still did better on cognitive tasks than if they’d been sitting still. “Regardless of sleep or hypoxic status, executive functions are improved during an acute bout of moderate-intensity exercise,” the authors explain.

Cerebral Oxygenation From Cardio Isn’t Needed to Boost Executive Functions

Much like a new discovery in my father’s lab often unearthed fresh unknowns, the latest (2023) study by Williams et al. shows that just 20 minutes of exercise can improve cognitive performance even after a poor night’s sleep, but its findings also suggest that increased cerebral oxygenation during cardio isn’t the only way exercise boosts brain power.

This hypoxia study adds to growing evidence suggesting that, contrary to popular belief, exercise-induced cortical oxygenation alone doesn’t explain why moderate-intensity aerobic activity enhances executive function.

“One potential hypothesis for why exercise improves cognitive performance is related to the increase in cerebral blood flow and oxygenation; however, our findings suggest that even when exercise is performed in an environment with low levels of oxygen, participants were still able to perform cognitive tasks better than when at rest in the same conditions,” Thomas Williams explained in a November 2023 news release.

In their recent paper, Williams and co-authors emphasize that although the prefrontal cortex (PFC) is often considered the primary brain region associated with executive function, solid cognitive performance isn’t solely dependent upon the PFC. Rather, they state that optimizing executive functions is “the product of a series of coordinated processes widely distributed across cortical and subcortical brain regions, interconnected through a series of complex neural networks.”

Even though hypoxia reduces prefrontal cortex oxygenation during aerobic activity, the researchers speculate that other brain regions may compensate when the frontal lobes aren’t getting enough oxygen. That said, neuroscientists aren’t exactly sure how different brain regions compensate for cortical deoxygenation when someone doing cardio experiences acute hypoxia.

Williams et al.’s latest findings dovetail with another recent human study (Moriarity et al., 2019), which found that although moderate-intensity aerobic exercise increased prefrontal oxygenation, having more oxygen in cortical brain areas wasn’t necessarily associated with improved cognitive performance.

In fact, the researchers found that higher-intensity exercise (which delivered more oxygen to the brain’s frontal lobes) was associated with slower processing speeds and a reduction in fluid intelligence test scores.

Cardio Strengthens Cerebro-Cerebellar Circuitry

The prefrontal cortex is located in the cerebrum’s frontal lobes. The cerebellum is tucked underneath the cerebrum. Cerebral means “relating to the cerebrum.” Cerebellar means “relating to the cerebellum.” Source: BlueRingMedia/Shutterstock

Other research on how exercise boosts brain power suggests moderate-to-vigorous physical activity (MVPA) improves functional connectivity between the frontal lobes and the cerebellum. Making neural circuitry between cortical and subcortical areas more robust may help the entire brain work in concert to coordinate thinking processes better.

Beyond cortical oxygenation, improved functional connectivity between the cerebellum and cerebrum may help explain how rhythmic aerobic exercise improves executive functions regardless of hypoxic status. (See “Cerebro-Cerebellar Circuits Remind Us: To Know Is Not Enough.”)

A few years ago, researchers (Li et al., 2019) found that 30 minutes of aerobic exercise altered the role of the cerebellum’s right hemisphere when processing simple executive tasks. As Lin Li and co-authors explain, “It is plausible that acute exercise might alter the pattern of functional connectivity between cerebellar loops and cognition-related areas of the prefrontal cortex, and thereby further improve the performance of executive function after exercise.”

Take-Home Message: More Research Needed

Until recently, it was widely believed that increased prefrontal cortex oxygenation was the primary way moderate-intensity aerobic activity improved cognitive performance. Mountains of evidence suggest that increasing oxygen to the frontal lobes via cardio boosts brain power. However, the latest (2023) findings also imply that cerebral oxygenation alone doesn’t explain why exercise improves executive functions.

More neuroscientific research is needed to identify all the exercise-related neural mechanisms—from prefrontal cortex oxygenation to improved cerebro-cerebellar functional connectivity—that may boost brain power. Hopefully, future studies will elucidate the role cortical and subcortical regions, such as the cerebellum, play in exercise-related upticks in cognitive performance.

References

Thomas B. Williams, Juan I. Badariotti, Jo Corbett, Matt Miller-Dicks, Emma Neupert, Terry McMorris, Soichi Ando, Matthew O. Parker, Richard C. Thelwell, Adam O. Causer, John S. Young, […]

Probe Into Brain’s Memory Center Could Solve Mystery of Alzheimer’s Cause

U.S. researchers are developing a new tool—a multipurpose fiber that will provide a window into the deep brain—with the goal of learning how to slow down or even reverse memory loss in Alzheimer’s disease.

Barely thicker than a strand of human hair, the tiny probe will be implanted first into the brains of mice, and potentially later into humans, to help researchers study the buildup of proteins suspected to cause Alzheimer’s and test treatments to combat it.

According to the Alzheimer’s Association, more than 6 million Americans are currently living with the disease—a number that, on average, increases nearly every minute and is expected to quadruple by 2050.

The condition, the most common form of dementia, affects memory, cognitive ability and behavior, and it is progressive, meaning it worsens with time. At present, there is no cure.

The research is being undertaken by professors Xiaoting Jia and Harald Sontheimer of Virginia Tech and professor Song Hu of Washington University in St. Louis.

Jia has witnessed the devastating impact of Alzheimer’s firsthand, after her grandmother developed the debilitating disease.

“Alzheimer’s is a devastating problem—I’ve seen firsthand how bad it could be,” the researcher explained in a statement.

This experience, she added, is why “it concerns me as an electrical engineer. I want to build tools and try to assist neuroscientists in solving brain problems.”

The tool the trio are developing will allow researchers to explore the relationship between Alzheimer’s disease and the buildup of protein clumps known as “amyloids,” which are thought to disrupt cell function in the brain and even cause cell death.

As Jia explains: “Amyloid deposits are the main feature for Alzheimer’s disease—and they begin developing years, even decades, before people show symptoms. It’s still a mystery how the deposits even begin.”

In fact, Jia notes, so far scientists have only proven that there is a correlation between the accumulation of amyloid plaques and Alzheimer’s.

It remains to be confirmed if the two have a causal link, and exactly what form this might take.

Scientists have observed that, early in the disease, the formation of amyloid deposits is accompanied by a reduction in the brain’s blood flow. Jia and her colleagues believe that this may starve neurons in the hippocampus of much-needed oxygen.

“A big problem in Alzheimer’s research is there are a lot of dysfunctions in the brain having to do with neurovascular changes, but we don’t totally understand how those changes impact memory loss and behaviors that eventually impair their life,” said Hu.

The biomedical engineer added: “Conventional techniques have provided an important understanding of neurons and vasculature, but there’s a technology limitation.”

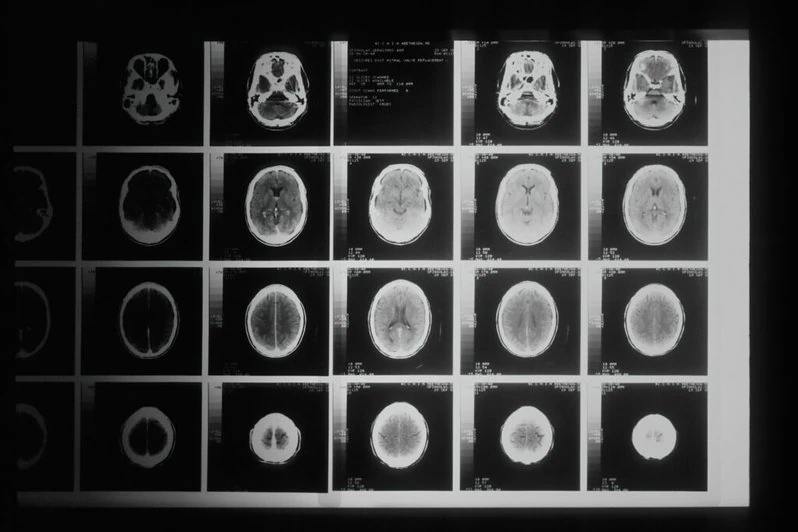

Neuroscientists commonly use tools like magnetic resonance imaging (MRI) machines and electroencephalograms (EEG) to look inside the brain and measure its activity, but these techniques have limited spatial and temporal resolution.

Other techniques are more precise, but also more invasive. Deep brain electrical stimulation, for example, which some researchers have suggested could help reverse memory loss in Alzheimer’s disease, is typically performed by surgically inserting electrodes into the brain. The researchers’ fiber tool, in contrast, should offer a better look inside the brain while requiring fewer and less complicated invasive surgeries.

Made of a flexible and durable polymer, the fiber should have long-lasting potential, allowing for ongoing studies of a given subject, while also carrying little to no risk of damage to the surrounding brain tissue.

It will enable a special camera known as an endoscope to take two different types of images within the brain, allowing researchers to observe not only neural activity, but also the aggregation of amyloid deposits and blood flow within the brain.

“What we are doing here, together, is creating a device with which we can visualize the buildup of biomarkers that are the culprits of Alzheimer’s disease,” said Sontheimer.

The neuroscientist added: “Usually, you can’t access or image that part of the brain, but this device will provide access to the hippocampus—home of spatial memory and retention.”

Furthermore, the fiber’s hollow core could also be used to deliver either electrical pulses or drugs designed to combat amyloid buildup to the brain, in the hope of restoring blood flow, freeing up communication between neurons, and restoring memory.

The team is working to a tight deadline, having been awarded a high-priority, one-year grant of nearly $800,000 by the National Institutes of Health to create and test two prototype fibers.

If they meet these targets successfully, however, they may be able to apply for additional, multi-year funding to further develop their tool.

“This is a very ambitious goal, what we’re trying to do in one year,” Jia said.

She concluded: “The brain is very nuanced, with more than 80 billion neurons, and we’re still behind on fully understanding how the brain functions and how diseases are formed.”

Mark Dallas, a professor of cellular neuroscience at the University of Reading in England who is not involved in the study, told Newsweek: “This exciting research looks to target Alzheimer’s disease on several fronts and for the first time will provide an insight into real-time changes in the brain as the disease takes hold.

“Going forward it will also open up the potential to deliver a payload to tackle the disease at onset, rather than having to wait for wholescale changes to be evident.”

Molecular biologist professor Bart De Strooper of University College London, who was also not involved in the study, told Newsweek: “There is a thick skull around the brain, which makes it difficult to pick up the early signs of disease and the acute changes in the brain upon drug delivery.

“I do not know whether this team will succeed, but it is certainly a fantastic way forward. We need more sensitive and more direct ways to diagnose Alzheimer’s disease before it actually starts to cause dementia, but we currently have little tools to do so.”

His colleague Dr. Marc Busche agreed, adding: “It […]

Hunger Games: How Gut Hormones Hijack the Brain’s Decision Desk

Scientists have discovered that a hunger hormone in the gut directly influences the brain’s hippocampus, affecting decision-making related to food. The study, conducted on mice, showed that hunger hormones modify brain activity to either inhibit or permit eating based on the animal’s hunger level. The findings could have implications for understanding and treating eating disorders.

A hunger hormone produced in the gut can directly impact a decision-making part of the brain in order to drive an animal’s behavior, finds a new study by UCL (University College London) researchers.

The study in mice, published in the journal Neuron, is the first to show how hunger hormones can directly impact activity of the brain’s hippocampus when an animal is considering food.

Study Findings and Implications

Lead author Dr. Andrew MacAskill (UCL Neuroscience, Physiology & Pharmacology) said: “We all know our decisions can be deeply influenced by our hunger, as food has a different meaning depending on whether we are hungry or full. Just think of how much you might buy when grocery shopping on an empty stomach. But what may seem like a simple concept is actually very complicated in reality; it requires the ability to use what’s called ‘contextual learning’.

“We found that a part of the brain that is crucial for decision-making is surprisingly sensitive to the levels of hunger hormones produced in our gut, which we believe is helping our brains to contextualize our eating choices.”

For the study, the researchers put mice in an arena that had some food, and looked at how the mice acted when they were hungry or full, while imaging their brains in real time to investigate neural activity. All of the mice spent time investigating the food, but only the hungry animals would then begin eating.

The researchers were focusing on brain activity in the ventral hippocampus (the underside of the hippocampus), a decision-making part of the brain that is understood to help us form and use memories to guide our behavior.

The scientists found that activity in a subset of brain cells in the ventral hippocampus increased when animals approached food, and this activity inhibited the animal from eating.

But if the mouse was hungry, there was less neural activity in this area, so the hippocampus no longer stopped the animal from eating. The researchers found this corresponded to high levels of the hunger hormone ghrelin circulating in the blood.

Experimental Insights and Broader Implications

Adding further clarity, the UCL researchers were able to experimentally make mice behave as if they were full by activating these ventral hippocampal neurons, which led animals to stop eating even when they were hungry. The scientists achieved the same result by removing the receptors for the hunger hormone ghrelin from these neurons.

Prior studies have shown that the hippocampus of animals, including non-human primates, has receptors for ghrelin, but there was scant evidence for how these receptors work.

This finding demonstrates how ghrelin receptors in the brain are put to use. It shows that the hunger hormone can cross the blood-brain barrier (which prevents many substances in the blood from reaching the brain) and act directly on the brain to drive activity, controlling a circuit that is likely to be the same or similar in humans.

Future Research Directions

Dr. MacAskill added: “It appears that the hippocampus puts the brakes on an animal’s instinct to eat when it encounters food, to ensure that the animal does not overeat – but if the animal is indeed hungry, hormones will direct the brain to switch off the brakes, so the animal goes ahead and begins eating.”

The scientists are continuing their research by investigating whether hunger can impact learning or memory, by seeing if mice perform non-food-specific tasks differently depending on how hungry they are. They say additional research might also shed light on whether there are similar mechanisms at play for stress or thirst.

The researchers hope their findings could contribute to research into the mechanisms of eating disorders, to see whether ghrelin receptors in the hippocampus might be implicated, as well as into other links between diet and health outcomes, such as the risk of mental illness.

First author Dr. Ryan Wee (UCL Neuroscience, Physiology & Pharmacology) said: “Being able to make decisions based on how hungry we are is very important. If this goes wrong it can lead to serious health problems. We hope that by improving our understanding of how this works in the brain, we might be able to aid in the prevention and treatment of eating disorders.”

Reference: “Internal-state-dependent control of feeding behavior via hippocampal ghrelin signaling” by Ryan W.S. Wee, Karyna Mishchanchuk, Rawan AlSubaie, Timothy W. Church, Matthew G. Gold and Andrew F. MacAskill, 16 November 2023, Neuron.

DOI: 10.1016/j.neuron.2023.10.016

Heartbeats and brain activity: Study provides insight into optimal windows for action and perception

A new study published in PLOS Biology suggests that our heartbeat plays a crucial role in determining our brain’s ability to perceive and react to the world around us. Researchers have discovered that during the 0.8 seconds of a heartbeat, there are optimal windows for action and perception, potentially impacting treatments for conditions like depression and stroke.

Previous research has shown that various bodily systems, including respiratory, digestive, and cardiac systems, influence our brain’s perception and action capabilities. Specifically, cardiac activity has been found to affect visual and auditory perception. However, the understanding of how cardiac activity, particularly the phases of the cardiac cycle, influences cortical and corticospinal excitability — the brain’s responsiveness — was limited. This study aimed to fill this knowledge gap.

The new study, conducted by Esra Al and colleagues at the Max Planck Institute for Human Cognitive and Brain Sciences in Germany, involved 37 healthy human volunteers aged 18 to 40. These individuals, free from neurological, cognitive, or cardiac health issues, underwent a series of non-invasive transcranial magnetic stimulation (TMS) pulses. These pulses were administered to the right side of the brain to stimulate nerve cells. The team measured the participants’ motor and cortical responses, as well as heartbeats, during the stimulation.

The researchers found that the timing of our heartbeat affects the brain’s responsiveness. They discovered that both the brain’s direct responses and muscle activities were more pronounced during the heart’s contracting phase.