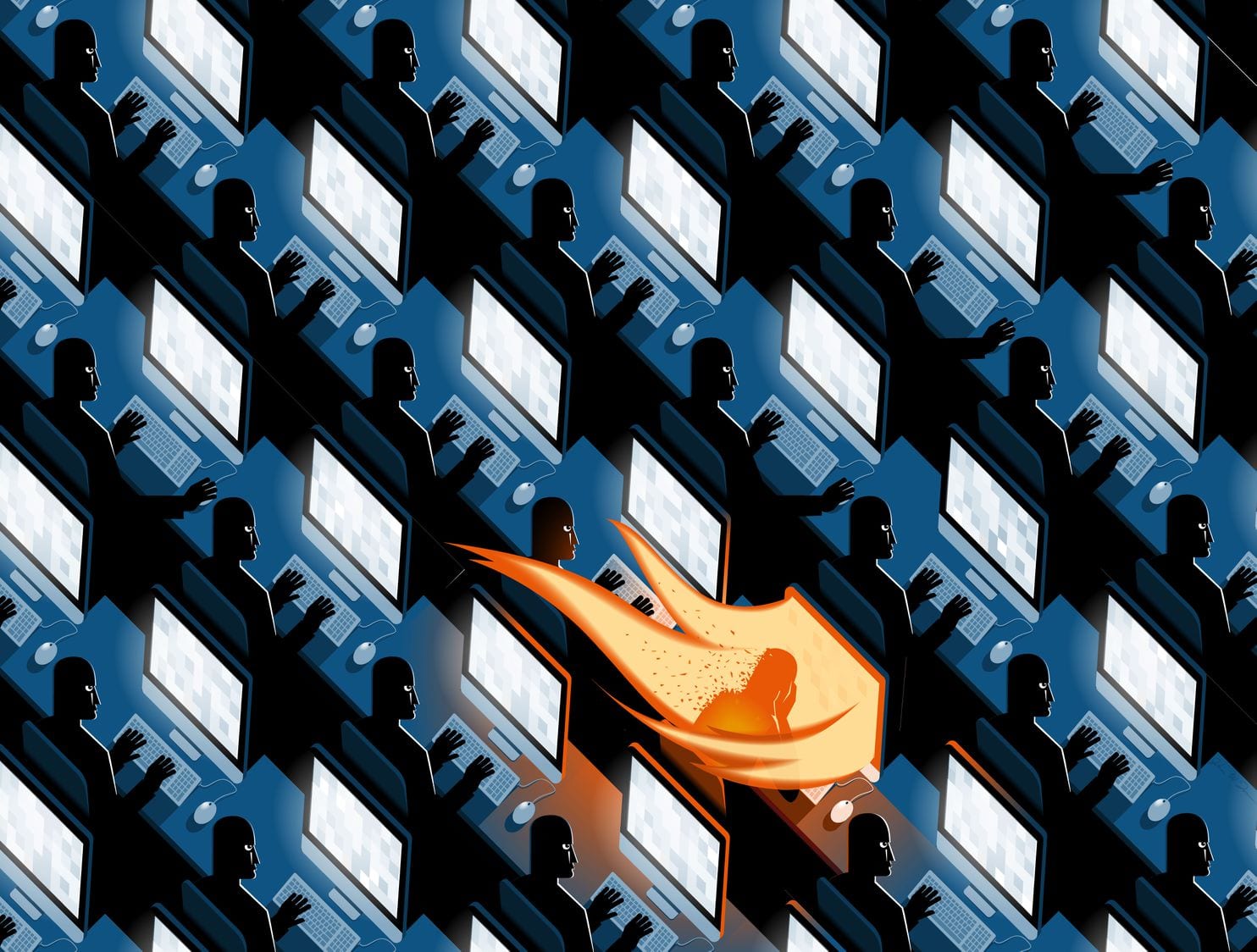

Social media companies are outsourcing their dirty work to the Philippines. A generation of workers is paying the price.

MANILA — A year after quitting his job reviewing some of the most gruesome content the Internet has to offer, Lester prays every week that the images he saw can be erased from his mind.

First as a contractor for YouTube and then for Twitter, he worked on a high-up floor of a mall in this traffic-clogged Asian capital, where he spent up to nine hours each day weighing questions about the details in those images. He made decisions about whether a child’s genitals were being touched accidentally or on purpose, or whether a knife slashing someone’s neck depicted a real-life killing — and if such content should be allowed online.

He’s still haunted by what he saw. Today, entering a tall building triggers flashbacks to the suicides he reviewed, causing him to entertain the possibility of jumping. At night, he Googles footage of bestiality and incest — material he was never exposed to before but now is ashamed that he is drawn to. For the last year, he has visited a mall chapel every week, where he works with a church brother to ask God to “white out” those images from his memory.

“I know it’s not normal, but now everything is normalized,” said the 33-year-old, using only his first name because of a confidentiality agreement he signed when he took the job.

Workers such as Lester are on the front lines of the never-ending battle to keep the Internet safe. But thousands of miles separate the Philippines and Silicon Valley, rendering these workers vulnerable to exploitation by some of the world’s tech giants.

In the last couple of years, social media companies have created tens of thousands of jobs around the world to vet and delete violent or offensive content, attempting to shore up their reputations after failing to adequately police content including live-streamed terrorist attacks and Russian disinformation spread during the U.S. presidential election. Yet the firms keep these workers at arm’s length, creating separation by employing them as contractors through giant outsourcing agencies.

Workers here say the companies do not provide adequate support to address the psychological consequences of the work. They said that they cannot confide in friends because the confidentiality agreements they signed prevent them from doing so, that it is tough to opt out of content that they see, and that daily accuracy targets create pressure not to take breaks. The tech industry has acknowledged the importance of allowing content moderators these freedoms — in 2015 signing on to a voluntary agreement to provide such options for workers who view child exploitation content, which most workers said they were exposed to.

The technology hardware industry has long operated by amassing armies of outsourced factory workers, who make the world’s smartphones and laptops under stressful working conditions, with sometimes fatal consequences. The software industry also increasingly leans on cheap and expendable labor, the unseen human toil that helps ensure that artificial intelligence voice assistants respond accurately, that self-driving systems can spot pedestrians and other objects, and that violent sex acts don’t appear in social media feeds.

The vulnerability of content moderators is most acute in the Philippines, one of the biggest and fastest-growing hubs of such work and an outgrowth of the country’s decades-old call center industry. Unlike moderators in other major hubs, such as those in India or the United States, who mostly screen content that is shared by people in those countries, workers in offices around Manila evaluate images, videos and posts from all over the world. The work places enormous burdens on them to understand foreign cultures and to moderate content in up to 10 languages that they don’t speak, while making several hundred decisions a day about what can remain online.

In interviews with The Washington Post, 14 current and former moderators in Manila described a workplace where nightmares, paranoia and obsessive ruminations were common consequences of the job. Several described seeing colleagues suffer mental breakdowns at their desks. One of them said he attempted suicide as a result of the trauma.

Several moderators call themselves silent heroes of the Internet, protecting Americans from the ills of their own society, and say they’ve become so consumed by the responsibility of keeping the Web safe that they look for harmful content in their free time to report it.

“At the end of a shift, my mind is so exhausted that I can’t even think,” said a Twitter moderator in Manila. He said he occasionally dreamed about being the victim of a suicide bombing or a car accident, his brain recycling images that he reviewed during his shift. “To do this job, you have to be a strong person and know yourself very well.”

The moderators worked for Facebook, Facebook-owned Instagram, Google-owned YouTube, Twitter and the Twitter-owned video-streaming platform Periscope, as well as other such apps, all through intermediaries such as Accenture and Cognizant. Each spoke on the condition of anonymity or agreed to the use of their first name only because of the confidentiality agreements they were required by their employers and the tech companies to sign.

In interviews, tech company officials said they had created many of the new jobs in a hurry, and acknowledged that they were still grappling with how to offer appropriate psychological care and improve workplace conditions for moderators, while managing society’s outsize expectations that they quickly remove undesirable content. The companies pointed to a series of changes they’ve made over the past year or so to address the harms. A Facebook counselor acknowledged a form of PTSD known as vicarious trauma could be a consequence of the work, and company training addresses the potential for suicidal thinking. They acknowledged there were still disconnects between policies created in Silicon Valley and the implementation of those policies at moderation sites run by third parties around the world.

The Post also spoke with one moderator in Dublin, and dozens in the United States, who recounted experiences similar to those reported in Manila. But unlike Facebook and Google contractors in the U.S., whose advocacy in recent months has helped lead the companies to promise increased wages and benefits, experts said the Filipino workers are far less likely to advocate for better working conditions. And while American workers view the job as a steppingstone to a potential career in the tech industry, Filipino workers view the dead-end job as one of the best they can get. They are also far more fearful of the consequences of breaking their confidentiality agreements.

The jobs have strong attractions for many recent college graduates here. They offer relatively good pay and provide a rare ticket to the middle class. And many workers say they walk away without any negative effects.

Though tens of thousands of people around the world spend their days reviewing horrific content, there has been little formal study of the impact of content moderators’ routine exposure to such imagery. While many Filipino workers say they became content moderators because they thought it would be easier than customer service, the work is very different from other jobs because of “the stress of hours and hours of exposure to people getting hurt, to animals getting hurt, and all sorts of hurtful violent imagery,” said Sylvia Estrada-Claudio, dean of the College of Social Work and Community Development at the University of the Philippines, who has counseled workers in the call-center industry. She said she worries about a generation of young people exposed to such disturbing material. “For people with underlying issues, it can set off a psychological crisis.”

II.

Manila’s skyline is plastered with American names, including Citigroup, Accenture, Trump Tower and dozens of lesser-known firms that are the staple of the country’s outsourcing economy. Their buildings are home to throngs of call-center workers, who, for nearly two decades, have been completing back-office tasks for English-speaking consumers in rich countries. Today, in addition to content moderation, those jobs include fielding customer-service calls for U.S. telecommunications companies and health insurers, weeding out illegal items for sale on eBay, deleting duplicate listings on Google Maps, or marking up video footage recorded by self-driving cars.

Lester had been working in call-center jobs for about a decade after getting an engineering degree from a university in Manila. Then, in 2017, he took an internal transfer within Accenture. The new job was a training position to work in content moderation for a search engine, which he later learned was YouTube. He initially thought the job moderating content online would be less stressful than dealing with irate customers over the phone, and he liked the free lunches that Accenture provided to moderators on Google accounts, he said. But he also found YouTube’s policies to be confusing and said he didn’t have access to counseling when he saw disturbing videos.

A few months later, he transferred within Accenture to work on the Twitter account because he had heard that Twitter offered better psychological support. The new job, which paid about $480 a month, gave him disposable income and meant that he was able to support his grandmother, with whom he shared a small one-bedroom house in Alabang, a suburb two hours away.

He would begin his commute at a nearby gas station alongside a seven-lane highway, where he would hail a jeepney, one of the ubiquitous colorful buses designed after World War II military jeeps that became popular when the country was a U.S. territory.

His 20-cent ride to work took him past factories and an abandoned resort, until the glittering high-rises of Manila became visible in the distance. To occupy himself, Lester would sometimes send out motivational Bible quotes to friends and colleagues over Facebook Messenger. Mostly, he would just catch up on sleep.

When Lester arrived to work on the Twitter account, he would put his phone and other valuables away in a locker. He would meet with his manager, who would give the team their statistics from the previous shift, such as the number of posts each person reviewed and their accuracy, as well as trending hashtags and breaking news events to monitor, such as the Pulse nightclub shooting in Orlando.

Then he would sit down in front of a large monitor that unspooled one post after another for hours at a time. The monitor tracked the number of “cases” in his “queue,” logging him out if he needed to take a bathroom break. Moderators for Twitter were often expected to review as many as 1,000 items a day, which includes individual tweets and replies and messages, according to current and former workers.

Lester said he had no control over what post he was going to see — whether the feed would show him an Islamic State murder or a child being forced into sex with an animal or an anti-Trump screed. He had no ability to blur or minimize the images, which are about the size of a postcard, or to toggle to a different screen for a mental breather, because the computer was not connected to the Internet.

“Too many to count,” he said, when asked to estimate the number of violent images he saw over the course of eight months of reviewing Twitter and YouTube content. One unforgettable video, of what he assumed was a gang-related murder in Africa, showed a group of men dragging a man into a forest and repeatedly slashing his throat with a large knife until blood covered the camera lens. Lester estimates that he reviewed roughly 10 murders a month. He reviewed at least 1,000 pieces of content related to suicide, he said, which included mostly photos and written posts of people crying for help or announcing their plans to kill themselves.

Other workers weren’t able to look away. “We’re the front-liners, like the 9/11 first responders,” said Jerome, another former moderator, who reviewed videos on Periscope, the video-streaming app owned by Twitter, until November 2018. One Indonesian moderator who censored content about his home country said he took comfort knowing that he was preventing radicalization every time he took down a post containing an ISIS flag.

After a while, Lester noticed that his work was beginning to take a toll on his well-being. It was reigniting feelings of depression that he had struggled with in his 20s, and he started to think about suicide again. He said he could not afford mental health treatment and didn’t seek it. At the same time, he was ashamed and disturbed to discover that some of the new sexual imagery to which he was being exposed aroused him.

Lester has not attempted suicide, but a former Facebook and Instagram moderator interviewed by The Post said the job influenced him to do so. The moderator took the job at the same time that he was going through family problems, and said the content he saw worsened his psychological state. He quit in 2015 after three months, and he attempted suicide the following year in a motel room, reenacting a scene he had reviewed the year before. His friends talked him down over the phone. “If I had stayed in that job, I’d be dead right now,” he said.

The night before Facebook, Google and Twitter testified before Congress to account for Russian interference on their platforms, in autumn 2017, Facebook chief Mark Zuckerberg announced that the company would hire 10,000 new workers in safety and security to review content on the site, bringing the total to 20,000 in a year. (Half of the 30,000 people who now review content for Facebook are contractors.) A month later, YouTube and Google promised 10,000 jobs by the end of 2018. Twitter, which is a much smaller company, began ramping up its content moderation, doubling its contractor workforce to 1,500 people over the following year.

After the tech firms’ proclamations, online job boards in Manila began to light up with openings from contracting companies for “moderator,” “data analyst,” “content quality editor,” as firms went on a hiring spree, said Sarah T. Roberts, assistant professor at UCLA and author of the book “Behind the Screen: Content Moderation in the Shadows of Social Media.” Some tech companies already had offices there, but after the Russia hearings, growth in content moderation accelerated a hundredfold, according to an industry executive who works in the U.S. and the Philippines.

The Philippines was a natural site to expand moderation operations because the former U.S. territory is English-speaking and has a two-decade history with call centers. In 2012, Facebook opened up a content moderation office in the Philippines through Accenture, which already had large call-center operations there. Twitter’s first Manila site opened in 2015 through the outsourcing company Cognizant.

Nicole Wong, Google’s former deputy general counsel, who helped create the company’s early policy-enforcement teams starting in 2004, said she was initially reluctant to outsource operations because she felt that the job required nuanced legal and cultural understandings that would be challenging for contract workers overseas to parse without strong oversight — and that the result could lead to bad judgment calls. But as technology companies expanded, they needed round-the-clock moderation and support for many languages.

Although moderators in the United States and Europe told The Post that they were asked to review content primarily in languages that they speak, Filipino moderators who spoke only Tagalog and English said that they were commonly asked to review content in as many as 10 languages. They said they would consult with a fluent speaker if one was available at work, but otherwise relied on Google Translate and Urban Dictionary, tools that sometimes exacerbated their confusion and created stress.

Content reviewers were initially important in countries where tech companies can be held criminally liable for content hosted on their platforms, such as Germany, Australia, France and Turkey. The U.S. has much looser rules for Internet platforms, but in the past two years, the American public and lawmakers have put enormous pressure on the technology industry to clean up a host of ills — fueling the rapid growth in moderator hiring.

Facebook’s moderator sites run by outsourcing companies are now spread across 20 countries, including Latvia and Kenya, and Twitter is in eight. (YouTube declined to answer questions about its operations, including the locations of content moderation centers.) Facebook says the Philippines and India are its largest overseas hubs.

Donald Hicks, vice president of Twitter Service, said in an interview that it is a challenge to balance emotionally sensitive work that requires breaks and psychological support with the need to meet metrics and performance goals. After he was hired to overhaul Twitter’s operations in early 2018, he focused on transitioning the work environment from a more “mechanical” call center to one that focused on the well-being of agents. “We’re not willing to build these huge farms,” he said.

In 2015, Facebook, Google, Twitter and other companies came together as part of an industry group called the Technology Coalition to recommend guidelines for reviewers that focused on child exploitation, which was the industry’s biggest focus at the time and one of the only areas in which tech firms were legally required to take down content.

The voluntary guidelines were an important acknowledgment of the psychological burdens of the job and, according to industry representatives, the only such attempt to create common standards to which companies could be held accountable. They called on firms to develop a workplace “resilience program” that allows workers to opt out of seeing the disturbing content, take breaks when they want to and confide in friends about the difficulties of the job. They also recommended letting workers switch to a different type of task for an hour to get a mental breather and making counseling “readily” and “easily” available.

Workers who had exposure to child exploitation and to other disturbing material said that in practice, the guidelines aren’t followed, adding that they weren’t even aware of the recommendations. (The guidelines were recently removed from the Technology Coalition’s website.)

Some moderators in the Philippines said they never encountered a counselor at work. Some said they had access to a counselor once a month or once every six months, while moderators in the United States say they can book time with a counselor once a week.

Lester and other moderators described how there was practically no way in the moment to opt out of reviewing content they might find objectionable because the software fed a constant stream of tickets, and people never knew what they were going to get. They could, however, request to be switched to another team that reviewed different subject matter.

Though all the companies deny that they have quotas for reviewing a certain number of posts per day, workers said they still felt pressure to meet targets for accuracy and review as many posts as possible during a shift, which made it harder to take allotted breaks. The effect, they said, was similar to a quota. Companies regularly audit moderators to ensure they are deleting content in accordance with company standards and expect moderators to maintain accuracy levels over 95 percent.

Facebook, which has faced the most scrutiny of any company, has made the most visible efforts to improve conditions for content moderators, such as attempting to end quotas for reviewing posts, raising wages for U.S. moderators, and hiring psychologists to design worker “resiliency” programs around the world.

The social network says it is planning to provide unlimited access to in-person counseling to its moderators, at all hours and in all offices around the world, and is exploring offering counseling options after workers leave the job. Facebook is also in the process of creating ways to evaluate workers on multiple metrics, which it hopes will reduce the pressure for accuracy that workers experience.

YouTube says moderators worldwide have “regular” access to counseling, and that they do not review content for more than five hours a day. But it declined to provide specific information.

Twitter says it now offers daily counseling and has instructed outsourcing companies to begin offering additional psychological support to workers after they leave the job. Hicks said that moderators all over the world have the freedom to leave their shifts early if they see a piece of content that disturbs them — and that they will be paid for the entire shift regardless.

Twitter and Facebook also defended the use of confidentiality agreements, saying such agreements were necessary to protect the privacy of users and the moderators, who have been attacked for their decisions in the past.

“We’re proud of our people and the work we do in Manila — partnering with our clients in a collective effort to help make the Internet safer,” said Accenture spokeswoman Rachel Frey. “We have a leading program, offering proactive and on-demand counseling, which we continually review, benchmark and invest in to create the most supportive workplace environment.” She declined to say how long on-demand counseling had been available.

“For our associates who opt to work in content moderation we offer a variety of support options, including 24/7 phone support, access to off-site counselors and regularly scheduled on-site counselors,” Cognizant said in an emailed statement. “Given the dynamic and constantly evolving trends in this relatively new area of work, we continue to work with leading HR and wellness consultants, as well as our clients, to develop and implement the next generation of wellness practices.”

Despite call centers being a common form of employment in the Philippines, only recently did workers start to make concerted efforts at unionization, said Jan Padios, an associate professor at the University of Maryland at College Park who has written a book on call-center workers in the Philippines.

The accuracy quotas, surveillance metrics and other mechanized routines of call-center work — honed for years by IT outsourcing companies — are ill-suited to a job that should require breaks, psychological support and time to process emotionally harrowing material, said Stefania Pifer, a psychologist who runs the Workplace Wellness Project, a San Francisco-based consulting service for social media companies. “It’s an old type of model struggling to fit into a new type of work,” she said. “And it can lead to unethical working conditions.”

IV.

Silicon Valley leaders talk constantly of automating labor, and in the Philippine call-center industry, having technology take over a person’s job is a fact of life. Content moderators, like other call-center workers, assume they are expendable.

Estrada-Claudio said that the expectation of automation is preventing a broader call for labor rights for moderators. “What the industry leaders are telling us is that automation will take over and we’re going to lose all those jobs anyway, so how do you fight and say you need more benefits?” she said.

But tech companies are starting to acknowledge that they may never reach full automation for moderation. “I think there will always be people” making judgment calls over content, Facebook’s Zuckerberg said in a Washington Post interview in April.

The result of this admission is that tech companies are creating a permanent job — dependent on invisible outsourced workers — with the possibility for serious trauma, with little oversight and few answers about how to address it.

At the same time, experts here believe the firms cannot possibly hire enough content moderators around the world to solve the enormous problems they face.

For at least some Filipino workers, the shift to automation can’t come quickly enough. Lester, who now works in a call center selling life insurance, says he has been pushing his former colleagues to quit moderation. In his free time, he hangs out with friends at the mall and tries to avoid skyscrapers, which is nearly impossible in Manila.

Content moderation, he said, should be left to the robots. “People think, because we’re Filipinos, we are happy people. We can adapt,” he said. “But this stays in our heads forever. . . . They should turn these jobs into machines.”
