
OpenAI and DeepMind teach AI teamwork by playing hide-and-seek

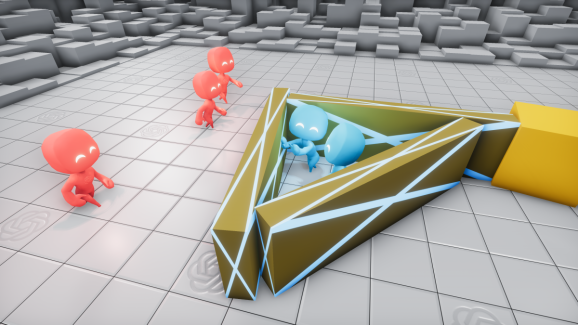

The age-old game of hide-and-seek can reveal a lot about how AI weighs the decisions it faces, not to mention why it interacts the way it does with other AI within its sphere of influence, or simply in its proximity. That’s the gist of a new paper published by researchers at DeepMind, the Alphabet subsidiary, and OpenAI, the San Francisco-based AI research firm backed by luminaries like LinkedIn cofounder Reid Hoffman. The paper describes how hordes of AI-controlled agents set loose in a virtual environment learned increasingly sophisticated ways to hide from and seek each other. Test results show that two-agent teams in competition self-improved faster than any single agent, which the coauthors say indicates that the forces at play could be harnessed and adapted to other AI domains to bolster efficiency.

The hide-and-seek AI training environment, which was made available in open source today, joins the many others that OpenAI, DeepMind, and DeepMind’s sister company Google have contributed to crowdsource solutions to hard problems in AI. In December, OpenAI published CoinRun, which is designed to test the adaptability of reinforcement learning agents. More recently, it debuted Neural MMO, a massive reinforcement learning simulator that plops agents in the middle of an RPG-like world. For its part, Google’s Google Brain division in June open-sourced Research Football Environment, a 3D reinforcement learning simulator for training AI to master soccer. And DeepMind last month took the wraps off OpenSpiel, a collection of environments and tools for reinforcement learning research in games.

“Creating intelligent artificial agents that can solve a wide variety of complex human-relevant tasks has been a long-standing challenge in the artificial intelligence community,” wrote the coauthors in this latest paper. “Of particular relevance to humans will be agents that can sense and interact with objects in a physical world.”

Training and playing

The hide-and-seek agents leaned on reinforcement learning, a technique that uses rewards to drive software policies toward goals, to teach themselves over repeated trials. Reinforcement learning paired with enormous compute has achieved tremendous success in recent years, but it has its limitations. Specifying reward functions or collecting demonstrations to supervise tasks can be time-consuming and costly. (State-of-the-art methods call for supervised learning on expert demonstration data, followed by rewards to further improve performance.) Furthermore, the skills learned in single-agent reinforcement learning tasks are constrained by the task description: once the agent learns to solve the task, there’s not much room to improve.
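
To make the reward-driven loop concrete, here is a minimal, textbook sketch of tabular Q-learning on a hypothetical five-cell corridor where the only reward comes from reaching the last cell. The environment, the `step`, `train`, and `greedy_policy` helpers, and every hyperparameter are illustrative assumptions; this is not the algorithm or setup the researchers used, which ran at vastly larger scale.

```python
import random

N_STATES = 5          # hypothetical corridor: cells 0..4, cell 4 is the goal
ACTIONS = (-1, +1)    # step left or step right


def step(state, action):
    """Toy environment: reward 1.0 only on reaching the rightmost cell."""
    nxt = min(max(state + action, 0), N_STATES - 1)
    done = nxt == N_STATES - 1
    return nxt, (1.0 if done else 0.0), done


def best_action(q, state, rng):
    """Greedy action with random tie-breaking so early episodes are unbiased."""
    best = max(q[(state, a)] for a in ACTIONS)
    return rng.choice([a for a in ACTIONS if q[(state, a)] == best])


def train(episodes=500, alpha=0.5, gamma=0.9, epsilon=0.1, seed=0):
    rng = random.Random(seed)
    q = {(s, a): 0.0 for s in range(N_STATES) for a in ACTIONS}
    for _ in range(episodes):
        state, done = 0, False
        while not done:
            # Epsilon-greedy: usually exploit current estimates, occasionally explore.
            if rng.random() < epsilon:
                action = rng.choice(ACTIONS)
            else:
                action = best_action(q, state, rng)
            nxt, reward, done = step(state, action)
            # Q-learning update: nudge the estimate toward reward + discounted future value.
            future = 0.0 if done else gamma * max(q[(nxt, a)] for a in ACTIONS)
            q[(state, action)] += alpha * (reward + future - q[(state, action)])
            state = nxt
    return q


def greedy_policy(q):
    """Best learned action for every non-goal cell."""
    return {s: max(ACTIONS, key=lambda a: q[(s, a)]) for s in range(N_STATES - 1)}


if __name__ == "__main__":
    print(greedy_policy(train()))
```

After training, the greedy policy steps right from every cell. No demonstrations were supplied; the sparse reward alone shaped the behavior, which is the property the paragraph above describes.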

The researchers instead pursued an approach they describe as “undirected exploration,” in which the agents’ understanding of the game world evolved freely, letting them devise creative winning strategies. It’s akin to the multi-agent learning approach advocated by DeepMind scientists last year in a study in which multiple AI systems were trained to play Capture the Flag in Quake III Arena. As in this research, the AI agents weren’t taught the rules of the game beforehand, yet they learned basic strategies over time and eventually surpassed most human players in skill.

Microsoft’s Suphx AI, OpenAI’s OpenAI Five, DeepMind’s AlphaStar, and Facebook’s Pluribus tapped similar tactics to master Mahjong, Dota 2, StarCraft II, and Texas Hold’em, respectively.

In the hide-and-seek game itself, several agents (the hiders) had to stay out of their rivals’ lines of sight after a brief preparation phase during which those rivals were immobilized, while the seekers were instructed to keep the hiders in view. (“Line of sight” in this context refers to a 135-degree cone in front of each agent.) Agents were penalized for venturing too far outside the play area, had to navigate randomly generated rooms and walls, and could grab objects (mainly boxes) scattered throughout the environment and lock them in place indefinitely.
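
The article defines line of sight as a 135-degree cone in front of each agent. Below is a minimal 2D sketch of that test, plus a hypothetical zero-sum team reward built on it. The function names, the coordinate and radian-heading conventions, and the +1/-1 reward scheme are assumptions for illustration. The sketch also ignores occlusion, so a wall or box between two agents would not block the view here as it would in the real environment.

```python
import math

FOV_DEGREES = 135.0  # cone width quoted in the article


def in_view_cone(observer_xy, heading_rad, target_xy, fov_deg=FOV_DEGREES):
    """True if target lies within the observer's forward cone (occlusion ignored)."""
    dx = target_xy[0] - observer_xy[0]
    dy = target_xy[1] - observer_xy[1]
    if dx == 0 and dy == 0:
        return True  # treat a co-located target as seen
    bearing = math.atan2(dy, dx)
    # Wrap the angle between heading and target bearing into [-pi, pi).
    offset = (bearing - heading_rad + math.pi) % (2 * math.pi) - math.pi
    return abs(offset) <= math.radians(fov_deg) / 2


def team_rewards(seekers, hiders):
    """Hypothetical zero-sum reward for one step.

    seekers: list of ((x, y), heading_rad); hiders: list of (x, y).
    Hiders get +1 if no hider is inside any seeker's cone, else -1;
    seekers get the negation.
    """
    seen = any(in_view_cone(pos, heading, h) for pos, heading in seekers for h in hiders)
    hider_reward = -1.0 if seen else 1.0
    return hider_reward, -hider_reward
```

For example, `team_rewards([((0.0, 0.0), 0.0)], [(1.0, 0.0)])` returns `(-1.0, 1.0)`, because the hider stands directly in front of the seeker. Making the reward depend on the whole team, rather than on each hider separately, is one simple way to give agents a reason to cooperate.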

The agents discovered as many as six distinct strategies over the course of training, each of which pressured the opposing team to progress to the next stage of the game. At first, hiders and seekers merely ran away and chased each other, but after roughly 25 million matches of hide-and-seek, the hiders learned to construct concealing shelters by moving boxes together and against walls. After another 75 million matches, the seekers learned to move ramps and use them to jump over the boxes and into the hiders’ shelter, and 10 million matches later, the hiders started bringing the ramps to the edge of the play area and locking them in place to keep the seekers from using them. Finally, after 380 million total matches, the seekers taught themselves to bring boxes to the edge of the play area and effectively “surf” them to the hiders’ shelter, exploiting the fact that the environment let them move along with a box without touching the ground.
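
The stage timings above mix incremental and cumulative counts. This short sketch converts them to cumulative totals using only the article’s own figures; the stage labels are paraphrases, and “roughly” applies to every number.

```python
from itertools import accumulate

# Increments in millions of matches, as reported in the article.
STAGES = [
    ("hiders build box shelters", 25),
    ("seekers use ramps to breach shelters", 75),
    ("hiders lock ramps at the play area's edge", 10),
]
BOX_SURFING_TOTAL = 380  # the article states this one as a cumulative total


def cumulative_milestones(stages=STAGES):
    """Return (label, cumulative millions of matches) for each stage."""
    totals = accumulate(increment for _, increment in stages)
    return [(label, total) for (label, _), total in zip(stages, totals)]


if __name__ == "__main__":
    milestones = cumulative_milestones()
    for label, total in milestones:
        print(f"~{total}M matches: {label}")
    gap = BOX_SURFING_TOTAL - milestones[-1][1]
    print(f"~{BOX_SURFING_TOTAL}M matches: seekers box-surf ({gap}M after ramp locking)")
```

By these figures, the milestones fall at about 25 million, 100 million, 110 million, and 380 million matches, so box surfing took roughly 270 million matches to emerge after ramp locking, longer than any earlier transition.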

The trained agents learned to divide labor, for instance each bringing its own box to the site where a shelter would be built. Moreover, they protected each other as a team, trying to defend against the seekers’ box surfing by locking boxes in place during the preparation phase.

Scale played a critical role in this, according to the researchers. The default model required 132.3 million episodes over 34 hours of training to reach the “ramp defense” stage of skill progression, and larger batch sizes generally led to faster training. Increasing the number of parameters (the internal variables a model learns from training data) from 0.5 million to 5.8 million, for instance, increased sample efficiency by a factor of 2.2.
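
The scale figures lend themselves to quick arithmetic. The sketch below derives average training throughput from the stated 132.3 million episodes and 34 hours, the ratio between the two model sizes, and what a 2.2-fold sample-efficiency gain means. It uses only the article’s numbers; note that the 2.2x figure compares the 0.5-million-parameter and 5.8-million-parameter models, so it should not be applied to the default model’s 132.3 million episodes.

```python
EPISODES_TO_RAMP_DEFENSE = 132.3e6  # default model, per the article
TRAINING_HOURS = 34
SMALL_PARAMS, LARGE_PARAMS = 0.5e6, 5.8e6
SAMPLE_EFFICIENCY_GAIN = 2.2


def episodes_per_second(episodes=EPISODES_TO_RAMP_DEFENSE, hours=TRAINING_HOURS):
    """Average throughput implied by an episode count and wall-clock time."""
    return episodes / (hours * 3600)


def episodes_needed(baseline_episodes, gain=SAMPLE_EFFICIENCY_GAIN):
    """A k-fold sample-efficiency gain reaches the same milestone in 1/k the episodes."""
    return baseline_episodes / gain


if __name__ == "__main__":
    print(f"{episodes_per_second():,.0f} episodes per second on average")
    print(f"{LARGE_PARAMS / SMALL_PARAMS:.1f}x more parameters in the larger model")
```

That works out to roughly 1,081 episodes per second, sustained for 34 hours, and an 11.6-fold increase in parameters in exchange for a 2.2-fold gain in sample efficiency.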

Benchmarking robustness

To evaluate the agents’ robustness, the researchers devised a suite of five benchmark intelligence tests categorized into two domains: cognition and memory, and manipulation. All tests use the same action space, observation space, and types of objects as the hide-and-seek environment:

- The Object Counting task measures whether agents have a sense of object permanence (the understanding that things continue to exist even when they can’t be perceived).

- The Lock and Return task measures whether agents can remember their original position while performing a new task.

- In Sequential Lock, four boxes are placed across three random rooms that have no doors but contain a ramp each. Agents must lock all the boxes in a particular order they haven’t previously observed.

- In the Construction From Blueprint task, agents must place boxes (eight of them, in an open room) on top of as many as four target sites.

- In the Shelter Construction task, agents have to build a shelter of boxes around a cylinder.
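
To illustrate what Sequential Lock demands, here is a hypothetical checker that scores an episode by how far the agent got through a hidden locking order. The article doesn’t describe the task’s actual scoring, so the function names, the box labels, and the rule that out-of-order locks are simply ignored are all assumptions.

```python
def sequential_lock_progress(target_order, lock_events):
    """Count how many boxes were locked in the required order.

    Hypothetical scoring: a lock counts only if it is the next box in
    target_order; out-of-order locks are ignored.
    """
    progress = 0
    for box in lock_events:
        if progress < len(target_order) and box == target_order[progress]:
            progress += 1
    return progress


def solved(target_order, lock_events):
    """True once every box has been locked in the required order."""
    return sequential_lock_progress(target_order, lock_events) == len(target_order)
```

For instance, with a hidden order of `["A", "B", "C", "D"]` and lock attempts `["B", "A", "B", "D", "C"]`, the agent gets credit for A, B, and C but never locks D after C, so progress is 3 and the task is unsolved. Because the order is never shown in advance, an agent can only succeed by exploring and remembering which locks paid off.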

On three of the five tasks, the agents pretrained in the hide-and-seek environment learned faster and achieved a higher final reward than both baselines. They did slightly better in Lock and Return, Sequential Lock, and Construction From Blueprint, but they performed worse on Object Counting and Shelter Construction.

The researchers blame the mixed results on skill representations that were both “entangled” and tough to fine-tune. “We conjecture that tasks where hide-and-seek pretraining outperforms the baseline are due to reuse of learned feature representations, whereas better-than-baseline transfer on the remaining tasks would require reuse of learned skills, which is much more difficult,” they wrote. “This evaluation metric highlights the need for developing techniques to reuse skills effectively from a policy trained in one environment to another.”

Future work

So what’s the takeaway from this? Simple game rules, multi-agent competition, and standard reinforcement learning algorithms at scale can spur agents to learn complex strategies and skills without supervision.

“The success in these settings inspires confidence that [these] environments could eventually enable agents to acquire an unbounded number of … skills,” wrote the researchers. “[It] leads to behavior that centers around far more human-relevant skills than other self-supervised reinforcement learning methods such as intrinsic [motivation].”

The advancements aren’t merely pushing forward game design. The researchers assert that their work is a significant step toward techniques that might yield “physically grounded” and “human-relevant” behavior, and that might support systems that diagnose illnesses, predict complicated protein structures, and segment CT scans. “[Our game-playing AI] is a stepping stone for us all the way to general AI,” DeepMind cofounder Demis Hassabis told VentureBeat in a previous interview. “The reason we test ourselves and all these games is … that [they’re] a very convenient proving ground for us to develop our algorithms. … Ultimately, [we’re developing algorithms that can be] translat[ed] into the real world to work on really challenging problems … and help experts in those areas.”
